It's been a long time since I first took a look at recognizing hand-written digits using machine learning. Back when I first ran across the problem, I had no idea where to start. So instead of doing the machine learning bits, I did some visualization instead.

Then I got my hands on Mathias Brandewinder's book Machine Learning for .NET Developers, and he showed some basics that I incorporated into my visualization. I still didn't know where to go from there. Recently, I've been doing some more F# exploration, and that inspired some ideas on how I might improve the digit recognizers.

To take a look at the history of the Digit Display & Recognition project, check out the "Machine Learning (sort of)" articles listed here: Jeremy Explores Functional Programming.

Blurring the Results

My first stab at trying to improve the recognizers came from reading Tomas Petricek's book Real-World Functional Programming. In the book, he shows a simple function for "blurring" an array:

There's a lot going on here, and I won't walk through it. But this takes an array of values and then averages each item with its neighbors.
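As a rough illustration of the idea (in Python rather than F#, and not the book's actual code), a neighbor-averaging blur might look like this. The name `blur_array` and the handling of the two end items (averaging them with their single neighbor) are my assumptions.

```python
def blur_array(values):
    """Return a new list where each item is averaged with its neighbors."""
    n = len(values)
    if n < 2:
        return list(values)
    result = [0.0] * n
    # Assumption: end items have only one neighbor, so average two values.
    result[0] = (values[0] + values[1]) / 2
    result[-1] = (values[-2] + values[-1]) / 2
    # Interior items are averaged with the item on each side.
    for i in range(1, n - 1):
        result[i] = (values[i - 1] + values[i] + values[i + 1]) / 3
    return result


print(blur_array([0, 9, 0]))  # [4.5, 3.0, 4.5]
```

The single spike of 9 gets spread across its neighbors, which is the "smoothing" effect in miniature.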

Here's an example that creates an array of random values and then runs it through the "blurArray" function:

If we look at the output, the first array is a set of random numbers. The second output shows the result of running it through our blur function one time.

The last result shows the result of running through the blur function three times. And we can see that the values get "smoother" (or "blurrier") with each step.
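To make "smoother" a bit more concrete, here is a small Python sketch (again, not the original F#) that blurs a random array once and three times and measures how "bumpy" each result is. The helpers `blur_times` and `roughness` are hypothetical names I made up for this illustration, and the edge handling in `blur_array` is an assumption.

```python
import random


def blur_array(values):
    """Average each item with its neighbors (end items use one neighbor)."""
    n = len(values)
    if n < 2:
        return list(values)
    result = [0.0] * n
    result[0] = (values[0] + values[1]) / 2
    result[-1] = (values[-2] + values[-1]) / 2
    for i in range(1, n - 1):
        result[i] = (values[i - 1] + values[i] + values[i + 1]) / 3
    return result


def blur_times(values, times):
    """Apply blur_array repeatedly."""
    for _ in range(times):
        values = blur_array(values)
    return values


def roughness(values):
    """Sum of absolute differences between adjacent items."""
    return sum(abs(a - b) for a, b in zip(values, values[1:]))


random.seed(1)
original = [random.randint(0, 20) for _ in range(10)]
once = blur_times(original, 1)
thrice = blur_times(original, 3)

for name, data in (("original", original), ("once", once), ("thrice", thrice)):
    print(name, [round(v, 1) for v in data], "roughness:", round(roughness(data), 1))
```

The roughness number should not grow from one step to the next, which matches what we see by eye in the output.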

Applying Blur to the Digit Recognizer

When I saw this, I thought of the digit recognition problem. Our data was simply an array of numbers. What would happen if I ran a similar "blur" over the digit data?

Note: this code is available in the "BlurClassifier" branch of the "digit-display" project on GitHub: jeremybytes/digit-display "Blur Classifier".

I thought of this because the current algorithms do a strict comparison between 2 images, one pixel at a time. If the images are offset (meaning translated horizontally or vertically by several pixels), then the current recognizers would not pick that up. If I added a "blur", then it's possible that it would account for situations like this.

Blurring the Data

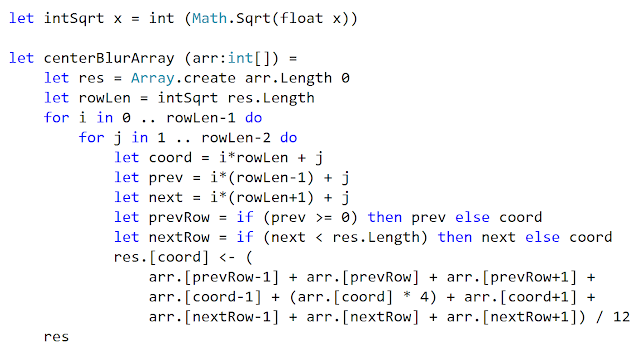

Here's my function to blur the data that we have:

This is a bit more complex than the function we have above. That's because we're really dealing with 2-dimensional data. Each pixel has 8 adjacent pixels (including the row above and below).

I won't go into the details here. I skipped over the edges to make things a bit simpler, and I also weighted the "center" pixel so that it was averaged in 4 times more than the other pixels.
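For a sense of the shape of this, here is a hedged Python sketch of a 2-dimensional blur over a flattened pixel array. It is not the F# code from the project. The name `blur_image`, the default width of 28, leaving edge pixels unchanged, and reading "4 times" as a center weight of 4 (so the divisor is 8 + 4 = 12) are all my assumptions.

```python
def blur_image(pixels, width=28):
    """Blur a flattened image (stored row by row) with a weighted 3x3 average."""
    height = len(pixels) // width
    # Assumption: edge pixels are skipped, meaning copied through unchanged.
    result = list(pixels)
    for row in range(1, height - 1):
        for col in range(1, width - 1):
            total = 0
            # Sum the 3x3 neighborhood, which includes the center once.
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    total += pixels[(row + dr) * width + (col + dc)]
            # Add the center 3 more times so it has a weight of 4.
            total += 3 * pixels[row * width + col]
            # 8 neighbors + center weight of 4 = 12 (integer pixel values).
            result[row * width + col] = total // 12
    return result


# A tiny 3x3 "image" with one bright pixel in the middle.
tiny = [0, 0, 0,
        0, 120, 0,
        0, 0, 0]
print(blur_image(tiny, width=3))  # [0, 0, 0, 0, 40, 0, 0, 0, 0]
```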

The New Distance Function

With this in place, I could create a new "distance" function:

This takes 2 pixel arrays, blurs them, and then passes them to our Manhattan Distance function that we already have in place. This means that we can do a direct comparison between our Manhattan Distance recognizer and our new Blur Distance recognizer.
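The composition can be sketched in Python like this (not the project's F# code). The names `manhattan_distance`, `blur_image`, and `blur_distance` are hypothetical, and the blur details (edges unchanged, center weight of 4, default width of 28) are assumptions.

```python
def manhattan_distance(a, b):
    """Sum of absolute pixel-by-pixel differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def blur_image(pixels, width=28):
    """Weighted 3x3 blur over a flattened image; edge pixels are unchanged."""
    height = len(pixels) // width
    result = list(pixels)
    for row in range(1, height - 1):
        for col in range(1, width - 1):
            total = 3 * pixels[row * width + col]  # extra center weight
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    total += pixels[(row + dr) * width + (col + dc)]
            result[row * width + col] = total // 12
    return result


def blur_distance(a, b, width=28):
    """Blur both images, then compare them with the Manhattan distance."""
    return manhattan_distance(blur_image(a, width), blur_image(b, width))


# Two 5x5 images with the same bright pixel, offset by one column.
left = [0] * 25
right = [0] * 25
left[2 * 5 + 1] = 240
right[2 * 5 + 2] = 240

print("plain:", manhattan_distance(left, right))
print("blurred:", blur_distance(left, right, width=5))
```

Because both functions take 2 pixel arrays and return a number, either one can be dropped into the same recognizer, which is what makes the side-by-side comparison possible.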

The Results

Unfortunately, the results were less than stellar. Here's the output using our Digit Display application:

Note: When comparing the results, the numbers aren't in the same order due to the parallelization in the application. But they should be in the same general area in both sets of data.

There is both good and bad in the results. The good news is that we correctly identified several of the digits that the Manhattan Classifier got wrong.

The bad news is that there are new errors that the original classifier got right. But even with the new errors, it didn't perform any "worse" overall than the original. That tells me that there may be some good things that we can grab from this technique.

But now let's look at another approach.

Adding Some Weight

The other idea that I came up with had to do with how the "best" match was selected. Here's the basic function:

This runs the "distance" function (the "dist" right in the middle) to compare our target item against every item in the training set. In the distance calculation, smaller is better, so this just takes the smallest one that it can find.
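In Python terms (a sketch, not the F# from the project), "take the smallest distance" looks roughly like this. The `(label, pixels)` tuple layout and the name `classify` are assumptions for illustration.

```python
def manhattan_distance(a, b):
    """Sum of absolute pixel-by-pixel differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def classify(training, target, dist=manhattan_distance):
    """Return the label of the training item closest to the target.

    training is a list of (label, pixels) pairs (an assumed layout).
    """
    label, _ = min(training, key=lambda item: dist(item[1], target))
    return label


training = [
    (0, [0, 0, 0]),
    (1, [10, 10, 10]),
]
print(classify(training, [9, 8, 10]))  # 1
```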

But the "best" match isn't always the correct one. So I came up with the idea of looking at the 5 closest matches to come up with a consensus.

Note: this code is available in the "WeightedClassification" branch of the "digit-display" project on GitHub: jeremybytes/digit-display "Weighted Classification".

This has quite a few steps to it. There's probably a much shorter way of doing this, but this makes it easy to run step-by-step using F# Interactive.

Instead of pulling the smallest value (using "minBy" in the original), it gets the 5 smallest values. It looks something like this (there's some bits left out to make it more readable):

Then it counts how many times each value appears. In this case, we have three 6s and two 5s. Then it pulls out the value that appears most often in the list. (And 6 is correct in this case.)
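Those steps (take the 5 closest, count each label, pick the most common) can be sketched in Python like this. This is an illustration, not the project's F# code; `classify_by_consensus`, the `(label, pixels)` layout, and the toy one-pixel "images" are all made up for the example.

```python
from collections import Counter


def manhattan_distance(a, b):
    """Sum of absolute pixel-by-pixel differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def classify_by_consensus(training, target, dist=manhattan_distance, k=5):
    """Return the most common label among the k closest training items."""
    # Step 1: sort by distance and keep the k closest items.
    nearest = sorted(training, key=lambda item: dist(item[1], target))[:k]
    # Step 2: count how many times each label appears.
    counts = Counter(label for label, _ in nearest)
    # Step 3: take the label with the highest count. On a tie, Counter
    # returns the label it saw first, which here is the closer one.
    return counts.most_common(1)[0][0]


# Toy data: the closest item is a 5, but the 5 closest hold three 6s.
training = [(5, [1]), (6, [2]), (6, [3]), (5, [4]), (6, [5]), (9, [100])]
print(classify_by_consensus(training, [0]))  # 6
```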

To put this into the application, I composed the functions a bit differently to come up with a "weighted" classifier that still used the Manhattan Distance.

The results were not very good:

This actually makes things less accurate overall. But there are some promising items in these results.

First, several of the items that the standard Manhattan Classifier got wrong were correctly identified by the weighted classifier. This did reinforce that the smallest number was not always the correct number.

But there were also a lot of items that this new classifier identified incorrectly. So overall, the performance was worse than the original.

More Refinement

Although this looks like a failure, I think I'm actually headed in the right direction. One thing that I can do to make this more accurate is to add a true "weight" to the calculation. Here's another example from our current approach:

If we look at these values, the distance calculations are fairly close together (within about 1500 of each other). In this case, we can pretty confidently take the one with the "most" values (which is 2 in this case).

But compare that to this:

Here we have a much bigger gap between our best value and our worst value (over 5000). And there is even a big gap between the first value and the next best value (over 4000). Because of this, I really want to weight the first value higher. A simple consensus doesn't work in this case (especially since we have a "tie").

So even though we get worse results with the current implementation, I think this really shows some promise.

If I can add some "weight" to each value (rather than simply counting them), I think it can improve the accuracy by eliminating some of the outliers in the data.
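One possible way to do that, sketched in Python, is to let each of the 5 closest matches vote with a weight of 1 / (distance + 1), so a much closer match counts for more. To be clear, inverse-distance weighting is my assumption for this sketch and not something that is implemented in the project; `classify_weighted` and the toy data are hypothetical.

```python
from collections import defaultdict


def manhattan_distance(a, b):
    """Sum of absolute pixel-by-pixel differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def classify_weighted(training, target, dist=manhattan_distance, k=5):
    """Pick a label from the k closest items using distance-weighted votes."""
    scored = sorted(
        ((dist(pixels, target), label) for label, pixels in training),
        key=lambda pair: pair[0],
    )[:k]
    votes = defaultdict(float)
    for distance, label in scored:
        # Assumed weighting: closer items get bigger votes.
        # The +1 avoids dividing by zero on an exact match.
        votes[label] += 1.0 / (distance + 1.0)
    return max(votes, key=votes.get)


# Toy data: one very close 3, then a "tie" between two 8s and two 1s.
training = [(3, [10]), (8, [50]), (8, [52]), (1, [60]), (1, [61])]
print(classify_weighted(training, [0]))  # 3
```

A simple count would see a tie between 8 and 1 here. The weighted vote picks 3 because it is so much closer than everything else.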

Wrap Up

I really like having the visualization for what the machine-learning algorithms are doing. This gives me a good idea of where things are going right and where they are going wrong. This is not something that I could get just from looking at "percentage correct" values.

These two approaches to improving the results didn't have the intended effect. But because we could see where they went right and where they went wrong, it's possible to refine these into something better.

I'll be working on adding actual weights to the weighted classifier. I think this holds the most promise right now. And maybe adding a bit of "blur" will help as well. More experimentation is needed. That means more fun for me to explore!

Happy Coding!
