I think that Smart Unit Tests is a really cool technology. And it's been interesting to explore different aspects of it. I really want this to work for me (and I'm even listening to the PEX team talk about it on .NET Rocks! as I write this). Unfortunately, I think I've come to the conclusion that it doesn't fit into my workflow.

Before I get to my reasons for this conclusion, let's look at another example that I was playing with earlier today.

[You can get all of the articles in the series here: Exploring Smart Unit Tests (Preview)]

[Update: Smart Unit Tests has been released as IntelliTest with Visual Studio 2015 Enterprise. My experience with IntelliTest is largely the same as with the preview version as shown in this article.]

Testing a Value Converter

For this exploration, I looked at a value converter. These are common in the XAML world (and one of the cool things about data binding in XAML).

Here's what a value converter looks like in its native state:

This allows us to data bind a "DateTime" object to a UI element that is expecting a "Brush". Here's a screenshot that shows this in action:

The background color of each of our list items is databound to the "StartDate" property of our "Person" object. Based on the date (or more specifically, the decade), we show a particular color.

Now I didn't expect to be able to test this "Convert" method directly just because of the complexity of the parameters. So, I created a simpler method. This takes a "Person" as a parameter and returns a "SolidColorBrush":

When we run Smart Unit Tests against this method, we run into a bit of a problem:

The first test is a genuine failure: we are not checking for a "null" in our parameter. But the rest of our tests generate "InvalidProgramException".

Let's look at the detail of one of these items:

This shows that there is a problem when running the tests. When we click into this, we see that the problem statement is where we say "new SolidColorBrush()". I'm not sure why there is a problem here. And I'm also not sure what we need to do to get the Smart Unit Tests to run as expected.

Just to make sure I didn't create some type of run-time error in this code, I created a little console app to test the code. I added the appropriate assembly references (as I did with the class library) since the "SolidColorBrush" is part of the System.Windows.Media namespace.

Here's the console application:

And here's the output:

So, I know that the code itself works. This means that there might be a problem with Smart Unit Tests or the Visual Studio 2015 Preview or the way that it makes its calls into the various assemblies. Again, we're dealing with a preview version, and I expect these types of issues to be resolved prior to release.

A Simpler Method

Since it seems like there's a problem with the "SolidColorBrush" statement, let's remove that dependency. Instead of returning a "SolidColorBrush", we'll just return a "string" to represent an HTML color.

Here's the new method (I just picked colors at random; I didn't try to match them to the colors in the other method):
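As a rough illustration, a method of this shape might look like the sketch below. This is not the original listing: the "Person" class here is a pared-down stand-in, the "Name" property and the "GetDecadeColor" name are hypothetical, and the color values are placeholders. The decade branches (1970, 1980, 1990, 2000) follow the description in this article.

```csharp
using System;

// Pared-down stand-in for the "Person" class (shape assumed for illustration).
public class Person
{
    public string Name { get; set; }
    public DateTime StartDate { get; set; }
}

public static class DecadeColors
{
    // Maps the decade of StartDate to an HTML color string.
    // The colors are placeholders, not the values from the original method.
    public static string GetDecadeColor(Person person)
    {
        // No null check here, which mirrors the behavior discussed in the article.
        int decade = (person.StartDate.Year / 10) * 10;
        switch (decade)
        {
            case 1970: return "#800000";
            case 1980: return "#008000";
            case 1990: return "#000080";
            case 2000: return "#808000";
            default: return "#808080";
        }
    }
}
```

Because the method takes a value in and hands a value back without touching any other state, it is the kind of code that parameter-generating tools tend to handle well.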

When we run Smart Unit Tests against this method, we get a better outcome:

This is a much better set of tests (without exceptions). As noted above, Test #1 is sending in a "null" for the parameter. Since our method does not check for null, it generates an exception. This is exactly what we expect, and we probably want to put a guard clause into this method to prevent this.
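For reference, a guard clause of the kind mentioned above could look something like this. It is only a sketch: the "Person" class is a minimal stand-in, and "GetDecade" is a hypothetical helper used to keep the example short.

```csharp
using System;

// Minimal stand-in for the "Person" class (assumed shape).
public class Person
{
    public DateTime StartDate { get; set; }
}

public static class GuardedDecade
{
    // Hypothetical helper: returns the decade (for example, 1980) of StartDate.
    public static int GetDecade(Person person)
    {
        // Guard clause: fail fast with a descriptive exception
        // instead of a NullReferenceException further down.
        if (person == null)
            throw new ArgumentNullException(nameof(person));

        return (person.StartDate.Year / 10) * 10;
    }
}
```

With the guard in place, a null argument still produces an exception, but it is an explicit "ArgumentNullException" that names the offending parameter.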

The tests generate real "Person" objects for the parameters. And as we can see, it's smart enough to generate objects that exercise each branch of our code.

Let's focus on the "StartDate" properties:

Here we can see that DateTime.MinValue and DateTime.MaxValue are both tested. The rest of our tests have random(ish) dates that fall within the decades that will hit each of our branches in the switch statement. So we have "1972-12-31" which will hit the "1970" branch; "1984-12-31" will hit the "1980" branch; "1992-12-31" will hit the "1990" branch; and "2000-12-31" will hit the "2000" branch.

So this is pretty cool. Even when we have an object with multiple properties as a parameter, Smart Unit Tests figures out what the important parts of that object are in order to exercise the code.

The Verdict

I really want to be able to incorporate Smart Unit Tests into my workflow -- primarily because it's such a cool technology. But ultimately, I don't think I'll be able to use Smart Unit Tests on a regular basis.

Testing Legacy Code

The most compelling reason for Smart Unit Tests is to create tests for legacy code so that we have a good idea of what the code is doing right now.

But here's the problem: If legacy code is difficult to understand (by a human), then it probably is not easily testable -- either due to large methods, shared state, tight coupling, or complex dependencies.

If the code is in a state where it has small, easy-to-understand methods that are fairly atomic, then I can probably understand what it's doing pretty easily. It would still be great to generate tests for this code. But in the world that I've dealt with, I've rarely seen code like this in a legacy code base.

The legacy code that I've dealt with is generally the "difficult to test" kind. In that case, I'm digging out my copy of Working Effectively with Legacy Code by Michael C. Feathers (Jeremy's Review). Smart Unit Tests are not going to be able to help me out there.

Testing Green Field Code

Okay, so maybe Smart Unit Tests won't work well with the legacy code that I deal with, but what about the green field code that I write?

That's really the blockade that I ran into yesterday. This is typical OO-based view model code. And Smart Unit Tests was not much of a help here. The dependencies are well separated (meaning, the view model and services are loosely coupled), and the class is testable (as we saw with the existing unit tests), but Smart Unit Tests was not able to easily create a set of tests here.

I would love to have those tests auto-generated so that I could fill in the gaps in my existing tests. But I do not want to go through the effort of creating a bunch of scaffolding so that Smart Unit Tests can do that for me. I feel that I'm better off spending my time enhancing my existing tests.

Where Smart Unit Tests Excels

Smart Unit Tests does very well with atomic, functional-style methods. We saw this when we started by looking at a method on Conway's Game of Life. But as mentioned previously, these methods are generally easy to test anyway (at least compared to tests that require specific starting states and ending states with OO).

Ultimately, C# is an OO language. It has a lot of functional features that have been added (and I really love those features). But we can't ignore its basis. If we want to do pure functional development, then we really should be using a functional-first language (like F#) rather than an OO-first language (like C#).

Wrap Up

Smart Unit Tests is a really cool technology. Unfortunately, it is of limited usefulness in my own workflow. I'll be keeping an eye on Smart Unit Tests to see how things change and evolve in the future. And I would encourage you to take it out for a spin for yourself. You may find that it fits in just fine with the type of code that you normally deal with.

I've been exploring different testing technologies and techniques for several years now. And I like to try out new things. What I've found through this exploration is a testing methodology that works for me -- makes me more efficient, faster, and more confident in my coding.

Be sure to explore different technologies and techniques for yourself. What works well for me may not work for you. So experiment and find what makes you most effective in your development process.

Happy Coding!

Tuesday, November 25, 2014

Monday, November 24, 2014

Yet More Smart Unit Tests (Preview): Fix and Roadblock

I've been exploring some more with Smart Unit Tests (preview). Last time, we looked at how we could mark exceptions in unit tests as expected by using the "Allow" option. This time, we'll look at the "Fix" option.

The articles in this series are all collected here: Exploring Smart Unit Tests (Preview)

[Update: Smart Unit Tests has been released as IntelliTest with Visual Studio 2015 Enterprise. My experience with IntelliTest is largely the same as with the preview version as shown in this article.]

Existing Code

As I mentioned previously, one of the best uses I can see for Smart Unit Tests is to run it against existing code to either generate a baseline set of tests or to fill in gaps of the existing tests. So, I decided to do some experimentation with some existing code that I had.

This is the sample project that I use in my presentation "Clean Code: Homicidal Maniacs Read Code, Too!". This code has a suite of unit tests already in place, and we use those tests as a safety net when we refactor the code. You can see a video of that refactoring on my YouTube channel: Clean Code: The Refactoring Bits.

This is a view model in a project that uses the MVVM design pattern. This means it contains the presentation logic for a form, and we have a set of 19 tests already in place:

Here's the "Initialize" method for this class:

We won't go into details too deeply here (you can get that from the video). The short version is that we have a couple of helper methods to get objects out of our dependency injection container. In this case, we are using the Service Locator pattern (which is considered an anti-pattern by some). This code is based on a real application, and we'll see a bit of funkiness as we go along.

To see a sample test, let's look at the 2nd line. Here, we get a model from our DI container and assign it to the backing field of our "Model" property. The test for this is pretty straightforward:

In our test, we create an instance of our view model and pass in our DI container as a parameter (we'll see how this gets initialized in a bit). Then we call the "Initialize" method and make sure that the "Model" property is not null.

We initialize our DI container in the test setup:

This creates an instance of our DI container (which is a UnityContainer) and assigns it to our class-level "_container" field. At the bottom of this method, we call into different methods to populate the PersonService (our service) and CurrentOrder (our model) in the container. These are split into separate methods so that we can easily put together different test scenarios -- for example, by registering a service that throws an exception to see how that is handled by the view model.

Here is where we create a mock of the service:

This is a bit complex. This is because our service (represented by the "IPersonService" interface) uses the Asynchronous Programming Model (or APM). This is a pattern where we have pairs of "BeginXxx" and "EndXxx" methods. This makes mocking and testing a bit interesting, and this is why we have the "IAsyncResult" types.

At the very bottom of this method, we put the mock service into the DI container with the "RegisterInstance" method.
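To make the Begin/End pairing a bit more concrete, here is a minimal sketch of an APM-style contract and a stand-in for it. Note that this swaps in a hand-written fake rather than the mock described above, and the method names ("BeginGetPeople", "EndGetPeople"), the "Person" shape, and the "FakePersonService" class are all assumptions for illustration. It relies on the fact that "Task<T>" implements "IAsyncResult".

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Minimal stand-in for the "Person" class (assumed shape).
public class Person
{
    public string Name { get; set; }
}

// Hypothetical APM-style contract: one Begin/End pair.
public interface IPersonService
{
    IAsyncResult BeginGetPeople(AsyncCallback callback, object state);
    List<Person> EndGetPeople(IAsyncResult result);
}

// Hand-written fake that completes immediately with canned data.
public class FakePersonService : IPersonService
{
    private readonly List<Person> _people;

    public FakePersonService(List<Person> people)
    {
        _people = people;
    }

    public IAsyncResult BeginGetPeople(AsyncCallback callback, object state)
    {
        // An already-completed task serves as the IAsyncResult handle.
        // The "state" argument is ignored in this sketch.
        Task<List<Person>> task = Task.FromResult(_people);
        if (callback != null)
            callback(task);
        return task;
    }

    public List<Person> EndGetPeople(IAsyncResult result)
    {
        // Unwrap the handle produced by BeginGetPeople.
        return ((Task<List<Person>>)result).Result;
    }
}
```

A caller passes the handle returned by the "Begin" method to the matching "End" method to get the data back, which is why "IAsyncResult" shows up on both sides of the pair.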

Running Smart Unit Tests

So, we have a not-very-simple class. But at the same time, we do have reasonable isolation of our dependencies so that we can replace them in our tests.

Let's see how Smart Unit Tests responds when we run against the "Initialize" method. As a reminder, here's the method:

When we right-click on the method and choose "Smart Unit Tests", we get the following results:

What we get is a number of warnings. For this list, I selected the "Object Creation" issues. As we see, Smart Unit Tests is having some problems creating instances of our objects.

Here are the details for the first item:

This tells us that Smart Unit Tests had to take its "best guess" of how to create our class. Notice that we get a hint: "Click 'Fix' to Accept/Edit Factory."

Applying "Fix"

Let's give this a try. But instead of doing this on our CatalogViewModel object, I'll do it on the UnityContainer object (our DI container) since it will be a bit easier to initialize.

Just like when we chose "Allow" in the last article, when we choose "Fix", it will create a new project in our solution and take us to the code we can modify.

Here is the factory method that we get by default:

This simply creates the UnityContainer object, but it doesn't initialize it at all like we are doing in our hand-written tests.

One thing I found a bit interesting (that has absolutely nothing to do with what we're talking about) was the "sad robot" parameter:

But what's more important is that we look at the comments.

This tells us that we need to fill in the blanks to handle the different ways that we can initialize our container. For this, I brought in some of the code that I had in the original unit tests to mock up the service and mock up the model. Then I added some parameters so that we could run tests with either of these objects included or not included (to test our error states).

Rather than creating separate methods, I just in-lined the code (to try to get things working -- we can always refactor it later).

A Bit of a Roadblock

If we re-run the Smart Unit Tests, we'll see that the warning that we "Fix"ed is now gone. We can do the same type of thing for our other methods as well. Unfortunately, I ran into a bit of a roadblock as I was doing this.

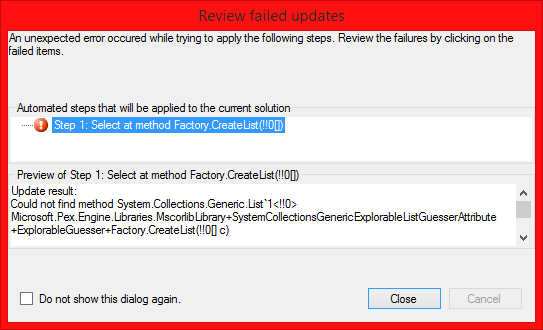

For the last item "Could not create an instance of System.Collections.Generic.List`1 <Common.Person>", when I tried to "Fix" this, I got an error:

I wish it had been able to generate a factory method stub for me, because this would have been fairly easy for me to implement. In fact, we created a list to use for testing earlier:

This error is a reminder that we are still dealing with a preview release here. There are still a few bugs to be worked out.

A Bigger Issue

As mentioned earlier, I'm still trying to figure out if Smart Unit Tests has a place in my workflow for building applications. Unfortunately, this particular experience is leading me away from Smart Unit Tests.

Object Initialization

This particular class is already fairly well tested; there are a few scenarios that aren't covered, but it's mostly there. If I do need to spend effort in putting together mocks and test factories, then I might as well write the tests manually. This lets me think in my normal way of testing rather than having me try to put together code that is conducive to the computer generating the tests.

Parameterization

The other concern that I have regarding testing this particular class is that it is a view model. View models are generally state driven (by the properties) and have a number of methods that operate on those properties. This is normal in the object-oriented programming world -- it's really the main reason we might want to use OO.

But Smart Unit Tests works primarily by coming up with parameters that will exercise the various code paths. How does it react when it walks up to a class with very few parameterized methods?

Here are the public methods for the CatalogViewModel class:

Notice that the constructor takes a parameter (our DI container), and most of the other methods don't have parameters. The 2 methods that take "object person" as a parameter are actually a bit of a problem, too. Inside the method, the parameter is immediately cast from "object" to "Person". The reason we have "object" as a parameter is to make it easier to interact with the XAML UI. It's not the best solution, but it generally works. So, in order to test these methods, we really need to be supplying a "Person" as a parameter rather than simply an "object". This could be difficult for Smart Unit Tests to figure out (and I never quite got that far in this case).
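The "object" parameter issue can be sketched in a few lines. The class and member names below ("SelectionSketch", "AddToSelection", "LastAdded") are hypothetical and are not taken from the CatalogViewModel; the point is only to show what happens when a caller supplies something other than a "Person".

```csharp
using System;

// Minimal stand-in for the "Person" class (assumed shape).
public class Person
{
    public string Name { get; set; }
}

public class SelectionSketch
{
    public Person LastAdded { get; private set; }

    // Typed as object for the benefit of the UI, but only a Person is usable.
    public void AddToSelection(object person)
    {
        // A direct cast throws InvalidCastException for any non-null,
        // non-Person argument; a null argument passes through as null.
        LastAdded = (Person)person;
    }
}
```

A tool that only sees "object" in the signature has no obvious reason to prefer a "Person" over, say, a string, so many of the inputs it tries would simply fail at the cast.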

Functional Programming

It seems like Smart Unit Tests would really excel in functional-style programming where we are passing in discrete parameters and returning discrete results. This is much different from testing object state. And we saw this in action when we tested our code for Conway's Game of Life which had these functional characteristics.

As a non-functional example, in the hand-written test, we call the "Initialize" method and then check the state of the "Model" property. This is not based on parameters or return values. The changes are based on the state of the object, including both the "container" field and the "Model" property.

Functional-style methods are extremely easy to test (since they don't deal with mutable object state or have weird internal dependencies). Being able to auto-generate tests for functional-style methods is nice to have, but I'm not sure how needed it is.

Wrap Up

I will be the first to admit that I may be missing something really big when it comes to Smart Unit Tests. Feel free to leave comments if you have any ideas or insights; I'd like to get some additional thoughts on the topic.

I really like the idea of having tests that exercise every code path. I like the idea of being able to "fill in the gaps" in my current tests. I like the idea of being able to generate tests against legacy code.

But if it turns out that I need to put a bit of effort into factory methods and mocking in order to get the tests to auto-generate, I'd rather put that effort into just creating the tests myself. As I mentioned, I'd rather build tests in a way that I (a human) understand than spend time building test scaffolding that an automated-testing system (a computer) understands.

I'll be exploring Smart Unit Tests further (including looking at "Fix" with simpler dependencies). And I'll be sure to share any breakthroughs or "a-ha" moments.

Happy Coding!

Sunday, November 23, 2014

Property Injection - Additional Unit Tests

I really like to talk about Dependency Injection (whether in live presentations or on Pluralsight). I recently got a question about how to do additional testing on the ServiceRepository object.

Here's the question:

The Existing Tests

Let's look at an existing test. This code can be downloaded here: Dependency Injection - A Practical Introduction.

Here's the setup:

The primary objective of this code is to create a mock object of our "IPersonService" (our SOAP service) that we can use when we are testing the "ServiceRepository" object. We're using Moq for this. The short version is that when someone calls the "GetPeople" method of the mock service, we would like to return the "people" list that has our 2 test records.

And here's an existing test for the "GetPeople" method:

This uses property injection to inject the "IPersonService" into the repository through the "ServiceProxy" property. We talked about property injection in detail in a previous article, so we won't go into that again here. As we can tell from our assertions, we're expecting to have 2 records returned by our method call (the same 2 test records we created in the setup).

Testing the AddPerson Method

To test the "AddPerson" method of the repository, we want to verify that the "AddPerson" method on the *service* is called. This is a slightly different approach than was proposed above, and we'll take a look at why I chose this path in just a bit.

In order to do this verification test, we're going to take advantage of a feature in Moq. But if we want to use this in our tests, we need access to the mock object itself in the test (which we don't currently have).

Here's our updated test setup:

Notice that we've changed the class-level variable. Instead of "IPersonService _service", we have "Mock<IPersonService> _serviceMock". The rest of our setup code is mostly the same. We're still mocking up the "GetPeople" and "GetPerson" methods. And notice that we haven't added anything special for the "AddPerson" method to the mock object.

Update the Existing Tests

Because we changed our class-level variable, we need to also modify the existing tests:

The difference is in the second line of our test. Instead of assigning "_service" to the "ServiceProxy" property, we assign "_serviceMock.Object". This pulls the "IPersonService" object out of the mock (and this is what we were doing in the original test setup to assign the class-level variable).

The AddPerson Test

So let's take a look at how we will test the "AddPerson" method:

Let's walk through this. In the "Arrange" section, we create an instance of the repository, inject the mock service through the property, and then create a new Person object to use as a parameter.

In the "Act" section, we call the "AddPerson" method with the new Person object. When the "AddPerson" in the repository is called, we expect that the "AddPerson" method in the service will be called.

And in the "Assert" section that is exactly what we check for. Our mock object has a "Verify" method that lets us check to see what methods were called, what parameters they are called with, and how many times they are called.

In this case, we want to verify that "AddPerson" was called on our mock object with the "newPerson" parameter. The "Times.Once()" option specifies that we expect this method was called one time (and only one time). There are lots of other cool options such as "Times.AtLeastOnce()" and "Times.Never()".

We can use this same approach to test our update and delete methods.
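Put together, the verification approach described above might look roughly like this. It is a sketch that assumes the Moq package is referenced; the "Person", "IPersonService", and "ServiceRepository" types are pared-down stand-ins with assumed signatures, and the "AddPersonVerification" wrapper is hypothetical (in a real test project this would be a test method).

```csharp
using System.Collections.Generic;
using Moq;

// Pared-down stand-ins (signatures assumed for illustration).
public class Person
{
    public string FirstName { get; set; }
}

public interface IPersonService
{
    List<Person> GetPeople();
    void AddPerson(Person person);
}

public class ServiceRepository
{
    // Property injection point for the service.
    public IPersonService ServiceProxy { get; set; }

    public void AddPerson(Person newPerson)
    {
        ServiceProxy.AddPerson(newPerson);
    }
}

public static class AddPersonVerification
{
    public static void Run()
    {
        // Arrange: inject the mocked service through the property.
        var serviceMock = new Mock<IPersonService>();
        var repo = new ServiceRepository { ServiceProxy = serviceMock.Object };
        var newPerson = new Person { FirstName = "Test" };

        // Act
        repo.AddPerson(newPerson);

        // Assert: Verify throws a MockException unless AddPerson was
        // called exactly once with this Person instance.
        serviceMock.Verify(s => s.AddPerson(newPerson), Times.Once());
    }
}
```

Notice that nothing is set up on the mock for "AddPerson". With Moq's default (loose) behavior, the call is simply recorded, and "Verify" checks that record afterward.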

Approaches to Testing the Add Method

This approach is a bit different from what was proposed in the question. That proposal included calling "AddPerson" and then calling "GetPeople" to verify that the new person was added to the data.

But we didn't check this at all. Why not?

Well, this has to do with what object we are testing. If we were testing the service (i.e. the data store), then we would want to verify that the new Person was actually persisted to our data storage layer. But we aren't testing the service here, we are testing the repository.

To test the repository, we want to make sure that we make the proper calls into the service, but we don't need to check that the service is doing what we expect (at least not here -- hopefully we have separate tests to verify the service does what we expect).

Why Did We Test the GetPeople Method Differently?

When we tested the "GetPeople" method, we created test data and configured the mock object to return that data. This is a different level of effort than we went through for "AddPerson". The reason is that "GetPeople" has a return value. In order to check the return value, we had to mock up the object to return a test value.

But the "AddPerson" method on the repository returns void. This means that we do not need to mock up a return value. Instead, we expect some sort of action to be done. In this case, we expect that the action is calling the "AddPerson" method of the service (which also returns void). So all we need to do is verify that the "AddPerson" call is made on the service.

A More Complex Approach

There is another approach that we could take. Instead of using a mock object, we could create a test object. This is simply a test class that implements the "IPersonService" interface. This class could have the test data built in to an internal list, and the CRUD operations could operate on this data.

So the "AddPerson" method would add a record to the test data, and "GetPeople" would return all of the test data including the new record.

This seems like a pretty reasonable approach. But with unit tests, we're testing pieces of code in isolation. As mentioned above, if we check the "GetPeople" method to make sure we get the new record, we're really testing the data storage part of the application (as opposed to just the repository part).

Wrap Up

There are a lot of different approaches to unit testing. So if you are using a full test object, don't feel like you're "doing it wrong". I take a practical approach: if the tests are valid, usable, and understandable, then use whatever works best for you.

In my experience, I've been successful with paring down unit tests to small pieces of functionality. In addition, I only test the publicly-exposed parts of my classes. If I feel the need to test an internal member, then I usually take that as a sign that I need to split out the functionality into another object. But that's a story for another day.

I have found unit tests to be invaluable in my workflow of building applications -- especially since I generally deal with applications with interactive user interfaces. The more things I can test, the less frequently I need to run through the UI of the application to test features, and the more confidently I can proceed with my coding.

Happy Coding!

Here's the question:

...Can you share some code that would unit test the AddPerson method in the ServiceRepository? What I've been trying to do on my own unit test of AddPerson is to:

In test setup:

1. Create a dummy list with two Person objects

2. Use Moq to mock up the IPersonService and setup GetAllPeople to return the dummy list

In the AddPeople Unit Test:

3. Create a new Person object

4. Create an instance of the ServiceRepository

5. Set the ServiceProxy property of the ServiceRepository to the mocked IPersonService

6. Call the GetAllPeople which will presumably return the dummy list

7. Assert that there are only 2 Person objects in the list

8. Call AddPerson method on the ServiceRepository

9. Assert that there are now 3 objects in the dummy list

Is this the right approach or am I completely off target?

When I first looked at the question, this looked like the approach that I would take. But as I've said before, I'm a bit of a slower thinker. After some more thought, I came up with a slightly different approach.

The Existing Tests

Let's look at an existing test. This code can be downloaded here: Dependency Injection - A Practical Introduction.

Here's the setup:

The primary objective of this code is to create a mock object of our "IPersonService" (our SOAP service) that we can use when we are testing the "ServiceRepository" object. We're using Moq for this. The short version is that when someone calls the "GetPeople" method of the mock service, we would like to return the "people" list that has our 2 test records.

And here's an existing test for the "GetPeople" method:

This uses property injection to inject the "IPersonService" into the repository through the "ServiceProxy" property. We talked about property injection in detail in a previous article, so we won't go into that again here. As we can tell from our assertions, we're expecting to have 2 records returned by our method call (the same 2 test records we created in the setup).

Testing the AddPerson Method

To test the "AddPerson" method of the repository, we want to verify that the "AddPerson" method on the *service* is called. This is a slightly different approach than was proposed above, and we'll take a look at why I chose this path in just a bit.

In order to do this verification test, we're going to take advantage of a feature in Moq. But if we want to use this in our tests, we need access to the mock object itself in the test (which we don't currently have).

Here's our updated test setup:

Notice that we've changed the class-level variable. Instead of "IPersonService _service", we have "Mock<IPersonService> _serviceMock". The rest of our setup code is mostly the same. We're still mocking up the "GetPeople" and "GetPerson" methods. And notice that we haven't added anything special for the "AddPerson" method to the mock object.

Update the Existing Tests

Because we changed our class-level variable, we need to also modify the existing tests:

The difference is in the second line of our test. Instead of assigning "_service" to the "ServiceProxy" property, we assign "_serviceMock.Object". This pulls the "IPersonService" object out of the mock (and this is what we were doing in the original test setup to assign the class-level variable).
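As a rough sketch, the updated setup and the modified "GetPeople" test might look something like this. The "Person" class, the interface members, the repository body, the test names, and the sample data are simplified stand-ins that I'm assuming for illustration (they are not the code from the download), and the test project is assumed to reference MSTest and Moq.

```csharp
using System.Collections.Generic;
using System.Linq;
using Microsoft.VisualStudio.TestTools.UnitTesting;
using Moq;

// Simplified stand-ins for the types discussed above (assumed shapes).
public class Person
{
    public string FirstName { get; set; }
    public string LastName { get; set; }
}

public interface IPersonService
{
    List<Person> GetPeople();
    Person GetPerson(string lastName);
    void AddPerson(Person newPerson);
}

public class ServiceRepository
{
    // Property injection: a test can swap in the mocked service here.
    public IPersonService ServiceProxy { get; set; }

    public IEnumerable<Person> GetPeople()
    {
        return ServiceProxy.GetPeople();
    }
}

[TestClass]
public class ServiceRepositoryTests
{
    // Keep the mock itself (not just the mocked object) so that
    // later tests can call Verify on it.
    private Mock<IPersonService> _serviceMock;

    [TestInitialize]
    public void Setup()
    {
        var people = new List<Person>
        {
            new Person { FirstName = "John", LastName = "Smith" },
            new Person { FirstName = "Mary", LastName = "Thomas" }
        };

        _serviceMock = new Mock<IPersonService>();
        _serviceMock.Setup(s => s.GetPeople()).Returns(people);
        _serviceMock.Setup(s => s.GetPerson(It.IsAny<string>()))
            .Returns((string lastName) =>
                people.FirstOrDefault(p => p.LastName == lastName));
    }

    [TestMethod]
    public void GetPeople_OnExecute_ReturnsTestRecords()
    {
        // Arrange: pull the IPersonService out of the mock.
        var repository = new ServiceRepository();
        repository.ServiceProxy = _serviceMock.Object;

        // Act
        var result = repository.GetPeople();

        // Assert: the same 2 test records created in the setup.
        Assert.IsNotNull(result);
        Assert.AreEqual(2, result.Count());
    }
}
```

Notice that nothing is configured for "AddPerson" in this setup. A Moq mock with default (loose) behavior simply accepts calls to methods that have no setup.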

The AddPerson Test

So let's take a look at how we will test the "AddPerson" method:

Let's walk through this. In the "Arrange" section, we create an instance of the repository, inject the mock service through the property, and then create a new Person object to use as a parameter.

In the "Act" section, we call the "AddPerson" method with the new Person object. When the "AddPerson" in the repository is called, we expect that the "AddPerson" method in the service will be called.

And in the "Assert" section that is exactly what we check for. Our mock object has a "Verify" method that lets us check to see what methods were called, what parameters they were called with, and how many times they were called.

In this case, we want to verify that "AddPerson" was called on our mock object with the "newPerson" parameter. The "Times.Once()" option specifies that we expect this method to be called exactly one time. There are lots of other cool options, such as "Times.AtLeastOnce()" and "Times.Never()".
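Here's a minimal sketch of what a test along these lines could look like. The type definitions are pared-down assumptions so that the sample stands on its own, and the test creates its mock inline rather than in a shared setup method. "Verify" and "Times.Once()" are the Moq features described above.

```csharp
using Microsoft.VisualStudio.TestTools.UnitTesting;
using Moq;

// Pared-down stand-ins (assumed shapes, not the downloadable code).
public class Person
{
    public string LastName { get; set; }
}

public interface IPersonService
{
    void AddPerson(Person newPerson);
}

public class ServiceRepository
{
    public IPersonService ServiceProxy { get; set; }

    public void AddPerson(Person newPerson)
    {
        // The repository just forwards the call to the service.
        ServiceProxy.AddPerson(newPerson);
    }
}

[TestClass]
public class AddPersonTests
{
    [TestMethod]
    public void AddPerson_OnExecute_ServiceIsCalled()
    {
        // Arrange
        var serviceMock = new Mock<IPersonService>();
        var repository = new ServiceRepository();
        repository.ServiceProxy = serviceMock.Object;
        var newPerson = new Person { LastName = "Test" };

        // Act
        repository.AddPerson(newPerson);

        // Assert: the service saw exactly one AddPerson call
        // with the same Person instance.
        serviceMock.Verify(s => s.AddPerson(newPerson), Times.Once());
    }
}
```

If the repository never called the service (or called it twice), "Verify" would throw a MockException and the test would fail.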

We can use this same approach to test our update and delete methods.

Approaches to Testing the Add Method

This approach is a bit different from what was proposed in the question. That proposal included calling "AddPerson" and then calling "GetPeople" to verify that the new person was added to the data.

But we didn't check this at all. Why not?

Well, this has to do with what object we are testing. If we were testing the service (i.e. the data store), then we would want to verify that the new Person was actually persisted to our data storage layer. But we aren't testing the service here, we are testing the repository.

To test the repository, we want to make sure that we make the proper calls into the service, but we don't need to check that the service is doing what we expect (at least not here -- hopefully we have separate tests to verify the service does what we expect).

Why Did We Test the GetPeople Method Differently?

When we tested the "GetPeople" method, we created test data and configured the mock object to return that data. This is a different level of effort than we went through for "AddPerson". The reason is that "GetPeople" has a return value. In order to check the return value, we had to mock up the object to return a test value.

But the "AddPerson" method on the repository returns void. This means that we do not need to mock up a return value. Instead, we expect some sort of action to be done. In this case, we expect that the action is calling the "AddPerson" method of the service (which also returns void). So all we need to do is verify that the "AddPerson" call is made on the service.

A More Complex Approach

There is another approach that we could take. Instead of using a mock object, we could create a test object. This is simply a test class that implements the "IPersonService" interface. This class could have the test data built into an internal list, and the CRUD operations could operate on this data.

So the "AddPerson" method would add a record to the test data, and "GetPeople" would return all of the test data including the new record.

This seems like a pretty reasonable approach. But with unit tests, we're testing pieces of code in isolation. As mentioned above, if we check the "GetPeople" method to make sure we get the new record, we're really testing the data storage part of the application (as opposed to just the repository part).
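For comparison, a hand-written test object of the kind described above might be sketched like this. "FakePersonService" is a hypothetical name, and the other types are simplified assumptions. The test follows the 2-records-then-3 check from the original question.

```csharp
using System.Collections.Generic;
using System.Linq;
using Microsoft.VisualStudio.TestTools.UnitTesting;

public class Person
{
    public string LastName { get; set; }
}

public interface IPersonService
{
    List<Person> GetPeople();
    void AddPerson(Person newPerson);
}

// Hand-written test object: the test data lives in an in-memory list.
public class FakePersonService : IPersonService
{
    private readonly List<Person> _people = new List<Person>
    {
        new Person { LastName = "Smith" },
        new Person { LastName = "Thomas" }
    };

    public List<Person> GetPeople()
    {
        // Return a copy so callers cannot change the internal list.
        return new List<Person>(_people);
    }

    public void AddPerson(Person newPerson)
    {
        _people.Add(newPerson);
    }
}

public class ServiceRepository
{
    public IPersonService ServiceProxy { get; set; }

    public IEnumerable<Person> GetPeople()
    {
        return ServiceProxy.GetPeople();
    }

    public void AddPerson(Person newPerson)
    {
        ServiceProxy.AddPerson(newPerson);
    }
}

[TestClass]
public class FakeServiceTests
{
    [TestMethod]
    public void AddPerson_OnExecute_GetPeopleIncludesNewPerson()
    {
        var repository = new ServiceRepository();
        repository.ServiceProxy = new FakePersonService();
        Assert.AreEqual(2, repository.GetPeople().Count());

        repository.AddPerson(new Person { LastName = "Test" });

        // This passes only if the fake's own storage logic works,
        // which is why it tests more than just the repository.
        Assert.AreEqual(3, repository.GetPeople().Count());
    }
}
```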

Wrap Up

There are a lot of different approaches to unit testing. So if you are using a full test object, don't feel like you're "doing it wrong". I take a practical approach: if the tests are valid, usable, and understandable, then use whatever works best for you.

In my experience, I've been successful with paring down unit tests to small pieces of functionality. In addition, I only test the publicly-exposed parts of my classes. If I feel the need to test an internal member, then I usually take that as a sign that I need to split out the functionality into another object. But that's a story for another day.

I have found unit tests to be invaluable in my workflow of building applications -- especially since I generally deal with applications with interactive user interfaces. The more things I can test, the less frequently I need to run through the UI of the application to test features, and the more confidently I can proceed with my coding.

Happy Coding!

Monday, November 17, 2014

Still More Smart Unit Tests (Preview): Exploring Exceptions

Last week I started an exploration of Smart Unit Tests (preview). The articles are collected here: Jeremy Bytes: Exploring Smart Unit Tests (Preview). In the last article, I added guard clauses to verify parameters to see how Smart Unit Tests reacts to it. I noted that the tests that generated the exceptions were still passing tests because they were annotated with the "ExpectedException" attribute.

I got a bit of clarification from Peli de Halleux (who is on the Pex team from Microsoft Research) about this behavior.

@jeremybytes Pex assumes that an ArgumentException coming from the type under test is expected; otherwise not

— Peli de Halleux (@pelikhan) November 18, 2014

This makes sense. Since Smart Unit Tests creates tests based on parameters, it makes sense that it treats ArgumentException differently from other exception types: ArgumentException is likely to be thrown by things like guard clauses and code contracts that verify parameters.

Let's explore this a bit more.

[Update: Smart Unit Tests has been released as IntelliTest with Visual Studio 2015 Enterprise. My experience with IntelliTest is largely the same as with the preview version as shown in this article.]

Something Other Than ArgumentException

I'll start by changing one of the guard clauses to a different exception type.

I changed the "ArgumentException" to "IndexOutOfRangeException". Now let's re-run Smart Unit Tests.

Now we see that we have a failing test. Test #7 uses "2" as the "currentState" (and as a reminder, this is outside the range of our enum).

And we can see that the test does throw an "IndexOutOfRangeException". But this time, it treats this as a failing test.

Here's the detail of the test when we click on it:

Notice the attributes: we have an attribute "PexRaisedException" which notes the exception that was raised, but we do not have an "ExpectedException" attribute in this case.

So what we can see here is that if the code throws something other than an ArgumentException (or something that inherits from it), the result is a failing test.
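To make the "or something that inherits from it" part concrete, here's a tiny sketch. The helper is hypothetical: it only models the rule as described here and is not how Pex itself is implemented.

```csharp
using System;

public static class ExceptionRules
{
    // Hypothetical helper: true for ArgumentException and anything
    // that inherits from it.
    public static bool IsArgumentExceptionOrSubtype(Exception ex)
    {
        return ex is ArgumentException;
    }
}

public static class ExceptionRulesDemo
{
    public static void Run()
    {
        // ArgumentOutOfRangeException inherits from ArgumentException.
        Console.WriteLine(ExceptionRules.IsArgumentExceptionOrSubtype(
            new ArgumentOutOfRangeException("liveNeighbors")));  // True

        // So does ArgumentNullException.
        Console.WriteLine(ExceptionRules.IsArgumentExceptionOrSubtype(
            new ArgumentNullException("currentState")));         // True

        // IndexOutOfRangeException inherits from SystemException instead.
        Console.WriteLine(ExceptionRules.IsArgumentExceptionOrSubtype(
            new IndexOutOfRangeException()));                    // False
    }
}
```

So the exception type we swapped in sits outside the ArgumentException family, which is why the test flips from "pass" to "fail".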

Now if we were not expecting this exception, this test gives us *great* information. It tells us we need to guard against the exception or catch it and make sure we handle it cleanly. So, this can give us some great insight into scenarios we may not have thought about.

But that's not what we have here. This exception is perfectly fine for this block of code.

Allowing the Exception

So we have a problem: we really want to allow this exception. (Side note: in a real application, I would keep this as some subtype of ArgumentException, but we're just using this as a sample case for an exception that we expect in our code.)

Fortunately, Smart Unit Tests allows for this. If we right-click on the failing test, we get a number of options:

These options are also available in the toolbar. Let's see what happens when we choose "Allow" and rerun Smart Unit Tests.

Now our test passes (Test #7). Here's what we see when we click on the details:

The attributes have been updated. Now we *do* have an "ExpectedException" attribute that says we are expecting an "IndexOutOfRangeException".

A New Test Project

When we choose "Allow" on our test, that setting needs to be saved somewhere (this is also true if we select one of the other options: Save, Fix, or Suppress). These end up in a new test project that gets added to our solution automatically.

The project is called "Conway.Library.Tests" (since it is based on the "Conway.Library" project). And the test class is "LifeRulesTest" since it is based on the "LifeRules" class.

Here's what the class looks like:

Since we chose "Allow", there are some attributes that reflect that.

Now, as Peli noted, "ArgumentException" is allowed by default. This is the first "PexAllowedExceptionFromTypeUnderTest" attribute. And based on the attribute, we can see that in addition to "ArgumentException" we also allow any exceptions that inherit from that exception (so things like "ArgumentOutOfRangeException" and "ArgumentNullException").

We also have a note that "InvalidOperationException" is allowed as well. We have the option of changing these attributes if we want to.

And then we move down to the test itself. Notice that it is attributed with "PexAllowedException" for "IndexOutOfRangeException". This means that we will no longer get a "fail" if that type of exception is thrown for this test.

Peli was also nice enough to point me to a wiki with more details from the Pex project (which is what Smart Unit Tests comes out of). You can find a description of the various attributes here: Pex - Automated Whitebox Testing for .NET : Allowing Exceptions.

Wrap Up

So we can see that Smart Unit Tests gives us control over what exceptions we consider to be acceptable in our code.

I've been playing a bit with the other options that we have on the tests as well (Save, Fix, and Suppress). "Save" is pretty obvious -- it saves off a copy of the tests so that we can re-run them later and even modify them if we want. "Fix" allows us to add manual code to places where Smart Unit Tests may not have been able to generate a complete test case. I'm exploring this right now, so you can look forward to more info in the future. I haven't used "Suppress" yet, but I'll give that a try as I continue my exploration.

Look forward to more to come.

Happy Coding!

Friday, November 14, 2014

More Smart Unit Tests (Preview): Guard Clauses

Yesterday we looked at the new Smart Unit Tests feature that is part of Visual Studio 2015 Preview. I couldn't resist doing a few more updates to my code to see how Smart Unit Tests would react. As a reminder, this is based on preview versions of the technology (from 11/12/2014).

[You can get links to all of the articles in the series on my website.]

[Update: Smart Unit Tests has been released as IntelliTest with Visual Studio 2015 Enterprise. My experience with IntelliTest is largely the same as with the preview version as shown in this article.]

Adding Guard Clauses

One thing that I mentioned yesterday is that we could add code contracts to put limitations on the parameter ranges, and Smart Unit Tests would pick up on that. So, I actually tried it.

Now I didn't use the "Contract" class/methods because I'm still a bit wary -- the classes are built into the .NET Framework, but you need to download an IL re-writer from Microsoft Research in order for it to work. I'm hoping the re-writer shows up "in the box" one of these days (or gets integrated into the Roslyn compiler).

So instead of using code contracts, I added a couple of guard clauses to the method:

The first statement checks to make sure that the "liveNeighbors" parameter is within the valid range of 0 to 8 (inclusive). Otherwise, we throw an argument out-of-range exception.

The next statement checks to make sure that the value of the "currentState" parameter is valid for the enum. As a reminder, enums are just integers underneath, so the compiler will accept any value for them. Because of this, we really should verify that we're getting a valid value.
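As a rough sketch, guard clauses along these lines would match that description. The method name "GetNewState", the static class, the "CellState" enum name, the exception messages, and the use of "Enum.IsDefined" are all my assumptions for illustration. The rules in the switch are the standard Conway's Game of Life rules.

```csharp
using System;

public enum CellState
{
    Alive,  // 0
    Dead    // 1
}

public static class LifeRules
{
    // "GetNewState" is a hypothetical name; the guard clauses are
    // the point of this sketch.
    public static CellState GetNewState(CellState currentState, int liveNeighbors)
    {
        // Guard 1: a cell can have between 0 and 8 live neighbors.
        if (liveNeighbors < 0 || liveNeighbors > 8)
            throw new ArgumentOutOfRangeException(
                "liveNeighbors", "liveNeighbors must be between 0 and 8");

        // Guard 2: enums are integers underneath, so (CellState)2
        // compiles. Reject values that the enum does not define.
        if (!Enum.IsDefined(typeof(CellState), currentState))
            throw new ArgumentException(
                "currentState is not a valid CellState", "currentState");

        switch (currentState)
        {
            case CellState.Alive:
                // A live cell survives only with 2 or 3 live neighbors.
                if (liveNeighbors < 2 || liveNeighbors > 3)
                    return CellState.Dead;
                break;
            case CellState.Dead:
                // A dead cell comes alive with exactly 3 live neighbors.
                if (liveNeighbors == 3)
                    return CellState.Alive;
                break;
        }

        return currentState;
    }
}
```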

And we saw this yesterday. When we did *not* have the guard clauses, Smart Unit Tests created a test to see what would happen with a variable that was out of range:

[Image: Tests *before* the guard clauses were added]

Test #5 uses a "currentState" of "2". The valid values are "0" (Alive) and "1" (Dead). And because we didn't have any checks in our code, this returns a valid result (actually, an invalid result from a business perspective, but a valid result as far as the compiler is concerned).

Updated Smart Unit Tests

When we run Smart Unit Tests against the new method with the guard clauses, we end up with a different set of tests:

[Image: Tests *with* the guard clauses]

We still have the same tests (in a slightly different order), but we also have some new tests (and one test with a new result).

Just like we talked about yesterday, Smart Unit Tests goes through every code branch and generates tests with parameters to exercise those branches. The same is true for the guard clauses.

So, if we look at Test #3, we see a test with "8" as the "liveNeighbors" parameter. This is the edge of the range for our first guard clause.

Then in Test #5, we see a test with "9" as the "liveNeighbors" parameter -- outside of the range of the guard clause. And notice the result: we get an ArgumentOutOfRangeException, and the error message matches what is generated by the method.

Is throwing an exception really considered a "passing" test? Absolutely. This is the behavior that we expect for an invalid parameter. Here's the actual test method that was generated:

Notice that there is an "ExpectedException" attribute. Normally a test that throws an exception results in a failure. But if we tell the testing framework that we expect to get an exception, then it becomes a "pass" -- in fact, it will fail if no exception is thrown.
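The generated test carries extra tool-specific attributes, but a hand-written MSTest equivalent shows the same idea. This is a minimal sketch: the names are assumptions, and the method under test is trimmed down to just the first guard clause.

```csharp
using System;
using Microsoft.VisualStudio.TestTools.UnitTesting;

public enum CellState
{
    Alive,
    Dead
}

public static class LifeRules
{
    // Hypothetical, trimmed-down method: only the guard clause matters here.
    public static CellState GetNewState(CellState currentState, int liveNeighbors)
    {
        if (liveNeighbors < 0 || liveNeighbors > 8)
            throw new ArgumentOutOfRangeException("liveNeighbors");

        // Game of Life rules omitted for brevity.
        return currentState;
    }
}

[TestClass]
public class LifeRulesGuardTests
{
    [TestMethod]
    [ExpectedException(typeof(ArgumentOutOfRangeException))]
    public void GetNewState_NineLiveNeighbors_ThrowsArgumentOutOfRange()
    {
        // Passes only if this call throws the expected exception type.
        // If nothing is thrown, the test framework reports a failure.
        LifeRules.GetNewState(CellState.Alive, 9);
    }
}
```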

Test #8 tests the other end of the first guard clause. It uses "int.MinValue" for the "less than 0" condition. And this generates the same exception we just looked at.

Finally, Test #7 looks at the value of our enum parameter. Before we added a guard clause, Smart Unit Tests did generate a test for this case -- "currentState = 2", but our method was not set up to catch this and returned a valid value.

But with the guard clause in place, this test now throws an exception.

Verifying Expectations

So it's interesting to see how Smart Unit Tests handles guard clauses. Just like our other code, it generates tests to exercise those code paths. And if there are exceptions, then it generates a test that assumes the exception is expected.

Again, these tests tell us what our code *actually* does, not what it is *expected* to do. After generating these tests, it is vitally important that we analyze all of the generated tests and results. What we may find is that there is a test that throws an exception where we do *not* want an exception to be thrown. Smart Unit Tests does not know the intent of our code -- only what it actually does.

So, if our tests throw exceptions, we need to double check to make sure that it is the behavior that we want. Otherwise, we need to update our code and try again.

[Update 11/17/2014: Peli de Halleux from Microsoft Research was nice enough to send some clarification on how exceptions are handled by default. We explore this in the next article: Smart Unit Tests (Preview): Exploring Exceptions.]

Smart Unit Tests and Legacy Code

As I mentioned yesterday, I'm still trying to figure out how (or if) this technology fits into my workflow. There is one place where Smart Unit Tests is very compelling: working with legacy code.

If I walk up to an existing code base that does not have unit tests in place, I can run Smart Unit Tests against the code to generate an expected baseline behavior -- this is what the code does today. Then I can save off those tests and run them regularly as I make updates or refactor the code. These tests would let me know if I inadvertently changed existing behavior.

That is a very compelling use case for this technology.

Now there are also some questions when thinking about this scenario. If a legacy code base does not have tests (and maybe it's a bit of a mess as well), then it may be difficult to create tests due to dependencies between classes.

The example method that we've been using to generate tests is *extremely* simple. And it lends itself well to unit testing. It does not have dependencies on any external objects or methods. It only acts on the parameters being passed in. And it does not modify any shared state that affects other parts of the code. Because of this, it is very easy to test.

But when we get to more complex situations that may require dependency injection or mocking, it's not quite as easy to generate tests -- especially if the code is not loosely-coupled. (It looks like I'll be running Smart Unit Tests against different types of code to see how it reacts.)

Wrap Up

It will be very interesting to see how this technology evolves. We saw here that we can add guard clauses to our method and appropriate tests are added to exercise those code paths. I'm going to keep thinking about ways that I can integrate this technology into my programming workflow. It seems like there are a lot of possibilities out there.

Happy Coding!

Thursday, November 13, 2014

Smart Unit Tests (Preview) and Conway's Game of Life

I have a love/hate relationship with automated test generation. Visual Studio 2015 Preview has a new built-in feature: Smart Unit Tests. This is one of the features that we got a sneak peek of at the Microsoft MVP Summit last week and was announced publicly at the Connect(); event this week.

Smart Unit Tests came out of the Pex project from Microsoft Research. And it works by going through the code, finding all of the possible code paths, and generating unit tests for each scenario it finds. I want to emphasize that this is currently a preview. So, we aren't looking at final functionality here.

I downloaded the latest Visual Studio 2015 Preview yesterday (11/12/2014) so that I could give this a try. Let's see how it works with our Conway's Game of Life implementation.

[Update: This exploration of Smart Unit Tests has turned into a series. Get all the articles here: Exploring Smart Unit Tests (Preview).]

[Update: Smart Unit Tests has been released as IntelliTest with Visual Studio 2015 Enterprise. My experience with IntelliTest is largely the same as with the preview version as shown in this article.]

Generating Tests

A couple weeks ago, we implemented the rules for Conway's Game of Life using TDD and MSTest (and then we looked at parameterized tests with NUnit). We'll work with that same method.

Here's the code we ended up with:
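As a rough sketch, reconstructed from the walkthrough that follows, the method looks something like this. The class name "LifeRules" and the method name "GetNewState" are assumptions; the parameter names, the enum order (with "Alive" as the "0" value), and the conditions come from the descriptions of the individual tests.

```csharp
public enum CellState
{
    Alive, // 0, which is also the default value for the enum
    Dead   // 1
}

public static class LifeRules
{
    // Returns the next state of a cell based on its current state
    // and the number of live neighbors around it.
    public static CellState GetNewState(CellState currentState, int liveNeighbors)
    {
        switch (currentState)
        {
            case CellState.Alive:
                // A live cell dies with fewer than 2 or more than 3 live neighbors.
                if (liveNeighbors < 2 || liveNeighbors > 3)
                    currentState = CellState.Dead;
                break;
            case CellState.Dead:
                // A dead cell comes to life with exactly 3 live neighbors.
                if (liveNeighbors == 3)
                    currentState = CellState.Alive;
                break;
        }
        // Any other value falls through the switch and is returned unchanged.
        return currentState;
    }
}
```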

Generating Smart Unit Tests is really simple: just right-click on the method (or class) and choose "Smart Unit Tests".

The result for this method is 5 tests:

Let's walk through how these are generated (this is the cool part about the technology).

Test #1

For the first test, it provides the default values for the parameters. Since "CellState" is an enum (which is actually an integer underneath), it uses the "0" value which happens to be "Alive". Since the "liveNeighbors" parameter is an integer, it just supplies "0".

The result when following this path is that it hits the "CellState.Alive" in the switch statement and then the "if" statement evaluates to "True" (since 0 is less than 2), so the result is "CellState.Dead".

Here's the actual test that was generated:

Other test methods look similar, so I'll let you use your imagination for those.

Test #2

For the next test, Smart Unit Tests recognized that we hit the "True" branch of the first "if" statement, so it supplies a parameter that would cause it to hit the "False" branch. In this case, it chooses "2" because the conditional includes "liveNeighbors < 2".

The result when following this path is that it just drops through to the "return" statement, so the value is unchanged: "Alive".

Test #3

For the next test, we take the next "case" in the "switch" statement. So, a test is created that passes in "CellState.Dead" and goes back to the default "0" value for the number of live neighbors. The "if" statement evaluates to "False" (since 0 is not equal to 3).

The result from this path is that the value is unchanged: "Dead".

Test #4

For the next test, Smart Unit Tests figures out that the "liveNeighbors" parameter needs to be "3" so that it hits the other branch of the "if" statement. This way it will evaluate to "True".

The result from this path is that the value is updated to "Alive".

Test #5

The final test passes in a value for the CellState enum that will not be caught by the "switch" statement. This value is "2" (which is an invalid value for the enum, but still allowed since it is really just an integer). This does not hit any branches, and so the value is returned unchanged: "2".

Pretty Cool

So this technology is pretty cool. It is not just passing in random values for the parameters of the tests. It is actually walking through the code and keeping track of which branches are hit or not hit. Then it generates parameters to exercise each branch of the code.

Now, I wish that this had generated at least one more test with the parameters "Alive" and "4". This would catch the "liveNeighbors > 3" condition. I'm hoping that this will be updated in a future release (again, remember we are dealing with preview code here). Regardless, each code path *is* exercised.

These tests are dynamically generated, but we can save them off as regular MSTest methods that we run with our standard test runner. That gives us a chance to review them, which is important because none of these tests show what the code is *expected* to do; they show what the code *actually* does. So, it's really up to us to analyze the tests that are generated to see if the results are what we expect.

Once we have these tests saved off, then we'll be able to tell if code updates change the functionality because they would fail (just like our other tests). This reminds me of a similar workflow that we have with Approval Tests, where we say "yes, this is a valid result" and then are warned if we don't get that expected result.

I'm a Bit Cautious

Even though this technology is really cool, I still have some misgivings about it -- mainly based on my experience with various teams.

Not Good for TDD

Obviously, we're generating tests *after* we have the code, so it's not possible to use this technology in a TDD environment. As I've mentioned previously, I'm not quite at TDD in my own coding practices -- I'm more of a "test alongside" person. This means that I write some code and then immediately write some unit tests to exercise the code. (This is much different from people who build their tests days or weeks after the code is written.)

Not Quite Exhaustive

Even though we are exercising all of the code paths, we aren't actually testing all of the valid values. Remember from our parameterized testing, we added test cases for both CellStates (Alive and Dead) and for the valid range of live neighbors (which is from 0 to 8). The result was 18 test cases:

This more closely matches our actual business case for this method.
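The count of 18 is simply 2 cell states times 9 possible neighbor counts (0 through 8). Here's a minimal sketch of that idea using a plain loop rather than NUnit attributes. The names "LifeRules", "GetNewState", and "ExhaustiveCases" are assumptions for illustration, and the method body is the same reconstruction used for the walkthrough, not the original listing.

```csharp
using System;
using System.Collections.Generic;

public enum CellState { Alive, Dead }

public static class LifeRules
{
    // Assumed shape of the method under test.
    public static CellState GetNewState(CellState currentState, int liveNeighbors)
    {
        switch (currentState)
        {
            case CellState.Alive:
                if (liveNeighbors < 2 || liveNeighbors > 3)
                    currentState = CellState.Dead;
                break;
            case CellState.Dead:
                if (liveNeighbors == 3)
                    currentState = CellState.Alive;
                break;
        }
        return currentState;
    }
}

public static class ExhaustiveCases
{
    // The rules stated on their own, independent of the implementation.
    public static CellState Expected(CellState state, int neighbors)
    {
        bool survives = state == CellState.Alive && (neighbors == 2 || neighbors == 3);
        bool isBorn = state == CellState.Dead && neighbors == 3;
        return (survives || isBorn) ? CellState.Alive : CellState.Dead;
    }

    // Every valid input: 2 states x 9 neighbor counts = 18 cases.
    public static List<Tuple<CellState, int>> AllInputs()
    {
        var inputs = new List<Tuple<CellState, int>>();
        foreach (CellState state in new[] { CellState.Alive, CellState.Dead })
        {
            for (int neighbors = 0; neighbors <= 8; neighbors++)
            {
                inputs.Add(Tuple.Create(state, neighbors));
            }
        }
        return inputs;
    }

    // Returns the inputs where the method disagrees with the stated rules.
    public static List<Tuple<CellState, int>> FindMismatches()
    {
        var mismatches = new List<Tuple<CellState, int>>();
        foreach (var input in AllInputs())
        {
            if (LifeRules.GetNewState(input.Item1, input.Item2) != Expected(input.Item1, input.Item2))
            {
                mismatches.Add(input);
            }
        }
        return mismatches;
    }
}
```

The difference from the generated tests is that the expected values here come from the rules themselves, so a mismatch means the code is wrong rather than just different from yesterday.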

Now, if we add code contracts, we can add valid ranges for our parameters. In this case, Smart Unit Tests would provide some tests for parameters outside of the range to make sure that it behaves as expected (such as by throwing an ArgumentOutOfRangeException). But it won't generate tests for all of the values in the valid range (again, this may change in future releases).

[Note: I experiment with guard clauses in the next article: More Smart Unit Tests (Preview): Guard Clauses.]

Developers Who Don't Understand Unit Testing

My biggest misgiving about auto-generated tests is more of a developer problem than a technical problem. I've worked with groups who consider success as having 100% code coverage in their tests. So a tool like this would simply encourage them to auto-generate tests and say that they are done.

It's easy to get a tool to report 100% code coverage, but it's much harder to actually have 100% coverage. Our generated tests above do give us 100% coverage, but they don't deal with 100% of the scenarios. For example, we really should put in a parameter check to make sure that "liveNeighbors" is between 0 and 8 (inclusive). But there's no way for the computer to know about a requirement that is missing from our code.

So I'm a bit wary of tools that make it easy for these types of teams to simply "right-click and done". This is great as a helper tool, but it should not replace our normal testing processes.

The Best Thing

The best thing about auto-generated tests is that we can use them to help fill in the gaps in our testing. In our scenario above, there was a test generated for a "CellState" of "2". This is out of range for our enum, but it is still valid code since the enum is ultimately just an integer.

I could use a test like this to say, "Whoa! No one should be passing in a '2' for that value." And then I could immediately add a range check to my method. The test would still be generated, but instead of the method returning normally, it would throw an exception -- which is exactly what we want.
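The reason a "2" can get through at all is that a C# enum accepts any value of its underlying integer type through a cast. Here's a small sketch of that, along with one way a range check could spot the problem. The helper name "CellStateChecks.IsValid" is made up for illustration; "Enum.IsDefined" is the standard framework method.

```csharp
using System;

public enum CellState
{
    Alive, // 0
    Dead   // 1
}

public static class CellStateChecks
{
    // Returns true only when the value matches a named member of the enum.
    public static bool IsValid(CellState state)
    {
        return Enum.IsDefined(typeof(CellState), state);
    }
}
```

With this in place, "CellStateChecks.IsValid((CellState)2)" compiles without complaint but returns "false", while "Alive" and "Dead" both return "true". A range check in the method could throw whenever this returns "false".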

Wrap Up

So like I said, I have a love/hate relationship with auto-generated testing. It is an extremely cool technology. And I really like how Smart Unit Tests is really smart -- it traverses the code to find all of the valid code paths. I'm going to be running this against a bunch of my other code to see what it comes up with.

But I am a bit wary as well. I'm afraid that some developers will use it to auto-generate the tests and then think nothing more about testing. That really scares me.

I'm going to be following this technology closely. And I expect to use it quite a bit to fill in the gaps in my testing. It will be interesting to see where this goes.

Happy Coding!

Pretty Cool