Primary constructors seem to be one of those polarizing features in C#. Some folks really love them; some folks really hate them. As in most cases, there is probably a majority of developers who are in the "meh, whatever" group. I have been experimenting and reading a bunch of articles to try to figure out where/if I will use them in my own code.

I have not reached any conclusions yet. I have a few examples of where I would not use them, and a few examples of where I would. And I've come across some scenarios where I can't use them (which affects usage in other areas because I tend to write for consistency).

Getting Started

To get started understanding primary constructors, I recommend a couple of articles:

The first article is on Microsoft devblogs, and David walks through changing existing code to use primary constructors (including using Visual Studio's refactoring tools). This is a good overview of what they are and how to use them.

In the second article, Marek takes a look at primary constructors and adds some thoughts. The "Initialization vs. capture" section was particularly interesting to me. This is mentioned in David's article as well, but Marek takes it a step further and shows what happens if you mix approaches. We'll look at this more closely below.

Very Short Introduction

Primary constructors allow us to put parameters on a class declaration itself. The parameters become available to the class, and we can use this feature to eliminate constructors that only take parameters and assign them to fields.

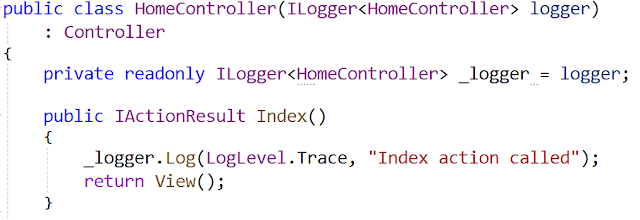

Here's an example of a class that does not use a primary constructor:

```csharp
public class HomeController : Controller
{
    private readonly ILogger<HomeController> _logger;

    public HomeController(ILogger<HomeController> logger)
    {
        _logger = logger;
    }

    public IActionResult Index()
    {
        _logger.Log(LogLevel.Trace, "Index action called");
        return View();
    }

    // other code
}
```

In a new ASP.NET Core MVC project, the HomeController has a _logger field that is initialized by a constructor parameter. I have updated the code to use the _logger field in the Index action method.

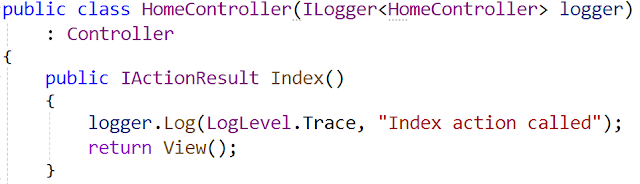

The code can be shortened by using a primary constructor. Here is one way to do that:

```csharp
public class HomeController(ILogger<HomeController> logger)
    : Controller
{
    private readonly ILogger<HomeController> _logger = logger;

    public IActionResult Index()
    {
        _logger.Log(LogLevel.Trace, "Index action called");
        return View();
    }

    // other code
}
```

Notice that the class declaration now has a parameter (logger), and the separate constructor is gone. The logger parameter can be used throughout the class. In this case, it is used to initialize the _logger field, and we use the _logger field in the Index action method just like before. (There is another way to handle this that we'll see a bit later.)

So by using a primary constructor, we no longer need a separate constructor, and the code is a bit more compact.

Fields or no Fields?

As David notes in his article, Visual Studio 2022 offers 2 different refactoring options: (1) "Use primary constructor" and (2) "Use primary constructor (and remove fields)". (Sorry, I couldn't get a screen shot of that here because the Windows Snipping Tool was fighting me.)

The example above shows what happens if we choose the first option. Here is what we get if we choose the second (and remove the fields):

```csharp
public class HomeController(ILogger<HomeController> logger)
    : Controller
{
    public IActionResult Index()
    {
        logger.Log(LogLevel.Trace, "Index action called");
        return View();
    }

    // other code
}
```

In this code, the separate _logger field has been removed. Instead, the parameter on the primary constructor (logger) is used directly in the class. A parameter on the primary constructor is available throughout the class (similar to a class-level field), so we can use it anywhere in the class.

Guidance?

Here's where I hit my first question: fields or no fields? The main difference between the 2 options is that a primary constructor parameter is mutable.

This means that if we use the parameter directly (option 2), then it is possible to assign a new value to the "logger" parameter. This is not likely in this scenario, but it is possible.

If we maintain a separate field (option 1), then we can set the field readonly. Now the value cannot be changed after it is initialized.
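To make the mutability difference concrete, here is a minimal console sketch, assuming C# 12 or later. The IGreeter, EnglishGreeter, SpanishGreeter, CapturingService, and FieldService types are hypothetical stand-ins for the ILogger example, chosen so the code runs without ASP.NET Core. CapturingService uses its parameter directly (option 2); FieldService copies it into a readonly field (option 1).

```csharp
// Hypothetical types for illustration only (not from the original samples).
public interface IGreeter
{
    string Greet(string name);
}

public class EnglishGreeter : IGreeter
{
    public string Greet(string name) => $"Hello, {name}";
}

public class SpanishGreeter : IGreeter
{
    public string Greet(string name) => $"Hola, {name}";
}

// Option 2: the primary constructor parameter is used directly (captured).
public class CapturingService(IGreeter greeter)
{
    public string Run(string name) => greeter.Greet(name);

    // This compiles: a captured parameter can be reassigned like a mutable field.
    public void SwapGreeter() => greeter = new SpanishGreeter();
}

// Option 1: the parameter only initializes a readonly field.
public class FieldService(IGreeter greeter)
{
    private readonly IGreeter _greeter = greeter;

    public string Run(string name) => _greeter.Greet(name);

    // Uncommenting this line causes a compile error, because a readonly
    // field can only be assigned in a constructor or an initializer:
    // public void SwapGreeter() => _greeter = new SpanishGreeter();
}
```

After calling SwapGreeter, a CapturingService that started with an EnglishGreeter greets in Spanish. FieldService has no way to make the same change.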

One problem I ran into is that there is not a lot of guidance in the Microsoft documentation or tutorials. They present both options and note the mutability, but don't really suggest a preference for one over the other.

The Real Difference

Reading Marek's article opened up the real difference between these two approaches. (Go read the section on "Initialization vs. capture" for his explanation.)

The compiler treats the 2 options differently. With option 1 (use the parameter to initialize a field), the parameter is discarded after initialization. With option 2 (use the parameter directly in the class), the parameter is captured (similar to a captured variable in a lambda expression or anonymous delegate) and can be used throughout the class.
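One way to see initialization vs. capture is to ask reflection which instance fields each class ends up with. This is a sketch, assuming C# 12 or later; CapturedGreeting, InitializedGreeting, and the FieldInspector helper are hypothetical. The name of the compiler-generated field is an implementation detail, so the sketch only counts fields rather than relying on that name.

```csharp
using System;
using System.Linq;
using System.Reflection;

// Capture: `greeting` is used inside a member, so the compiler
// stores it in a hidden, compiler-generated (and mutable) field.
public class CapturedGreeting(string greeting)
{
    public string Greet(string name) => $"{greeting}, {name}";
}

// Initialization: `greeting` is only used in a field initializer,
// so the only field is the one declared in the source.
public class InitializedGreeting(string greeting)
{
    private readonly string _greeting = greeting;

    public string Greet(string name) => $"{_greeting}, {name}";
}

public static class FieldInspector
{
    // Returns the names of all instance fields (public and non-public).
    public static string[] InstanceFieldNames(Type type) =>
        type.GetFields(BindingFlags.Instance | BindingFlags.Public | BindingFlags.NonPublic)
            .Select(f => f.Name)
            .ToArray();
}
```

Both classes end up with exactly one field; the difference is who declares it. In the captured case, the compiler adds a hidden field for the parameter. In the initialized case, the only field is the readonly _greeting written in the source.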

As Marek notes, this is fine unless you try to mix the 2 approaches with the same parameter. For example, if we use the parameter to initialize a field and then also use the parameter directly in our code, we end up in a bit of a strange state. The field is initialized, but if we change the value of the parameter later, then the field and parameter will have different values.
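The mismatch can be reproduced in a few lines. In this sketch (a hypothetical MixedCounter type, assuming C# 12 or later), the start parameter initializes a readonly field and is also used directly in members. The compiler reports warning CS9124 for this but still builds the code.

```csharp
// Hypothetical type: `start` is used for BOTH initialization and capture.
// This compiles, but the compiler reports warning CS9124.
public class MixedCounter(int start)
{
    // Initialization: copies the value of `start` once, at construction.
    private readonly int _start = start;

    public int FromField => _start;

    // Capture: reads the compiler-generated copy of `start`.
    public int FromParameter => start;

    // Changes only the captured copy; `_start` keeps the original value.
    public void Reset(int value) => start = value;
}
```

After `new MixedCounter(5)` followed by `Reset(10)`, FromField still returns 5 while FromParameter returns 10. The field and the parameter have drifted apart.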

If you try the mix and match approach in Visual Studio 2022, you will get a compiler warning. In our example, if we assign the logger parameter to a field and then use the logger parameter directly in the Index action method, we get the following warning:

Warning CS9124 Parameter 'ILogger<HomeController> logger' is captured into the state of the enclosing type and its value is also used to initialize a field, property, or event.

So this tells us about the danger, but it is a warning -- meaning the code will still compile and run. I would rather see this treated as an error. I pay pretty close attention to warnings in my code, but a lot of folks do not.

Fields or No Fields?

I honestly have not landed on which way I'll tend to go with this one. There may be other factors involved. I look at consistency and criticality below -- these will help me make my decisions.

Consistency and Dependency Injection

A primary use case for primary constructors is dependency injection -- specifically constructor injection. Often our constructors will take the constructor parameters (the dependencies) and assign them to fields. This is what we've seen with the example using ILogger.

So, when I first started experimenting with primary constructors, I used my dependency injection sample code. Let's look at a few samples. You can find the code samples on GitHub: https://github.com/jeremybytes/sdd-2024/tree/main/04-dependency-injection/completed, and I'll provide links to specific files.

Primary Constructor with a View Model

In a desktop application sample, I use constructor injection to get a data reader into a view model. This happens in the "PeopleViewModel" class in the "PeopleViewer.Presentation" project (link to the PeopleViewModel.cs file).

```csharp
public class PeopleViewModel : INotifyPropertyChanged
{
    protected IPersonReader DataReader;

    public PeopleViewModel(IPersonReader reader)
    {
        DataReader = reader;
    }

    public async Task RefreshPeople()
    {
        People = await DataReader.GetPeople();
    }

    // other code
}
```

This class has a constructor parameter (IPersonReader) that is assigned to a protected field.

We can use a primary constructor to reduce the code a bit:

```csharp
public class PeopleViewModel(IPersonReader reader)
    : INotifyPropertyChanged
{
    protected IPersonReader DataReader = reader;

    public async Task RefreshPeople()
    {
        People = await DataReader.GetPeople();
    }

    // other code
}
```

This moves the IPersonReader parameter to a primary constructor.

Using a primary constructor here could be seen as a plus as it reduces the code.

As a side note: since the field is protected (and not private), Visual Studio 2022 refactoring does not offer the option to "remove fields".
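One plausible reason for this (an assumption, not something the refactoring documents) is that a primary constructor parameter is only in scope inside the class that declares it. A derived class cannot see the base class's reader parameter, but it can see a protected field. The sketch below assumes C# 12 or later and uses hypothetical, simplified stand-ins (Person, IPersonReader, FakeReader, PeopleViewModel, DiagnosticsViewModel) rather than the real sample types. It also leaves out INotifyPropertyChanged.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical, simplified stand-ins for the sample's types.
public record Person(string GivenName, string FamilyName);

public interface IPersonReader
{
    Task<IReadOnlyCollection<Person>> GetPeople();
}

public class FakeReader : IPersonReader
{
    public Task<IReadOnlyCollection<Person>> GetPeople() =>
        Task.FromResult<IReadOnlyCollection<Person>>(
            new List<Person> { new("Ann", "Smith"), new("Bo", "Jones") });
}

// Base class: the primary constructor parameter initializes a protected field.
public class PeopleViewModel(IPersonReader reader)
{
    protected IPersonReader DataReader = reader;

    public IReadOnlyCollection<Person> People { get; private set; } = new List<Person>();

    public async Task RefreshPeople() => People = await DataReader.GetPeople();
}

// Derived class: it can use the protected DataReader field,
// but the base class's `reader` parameter is not in scope here.
public class DiagnosticsViewModel(IPersonReader personReader) : PeopleViewModel(personReader)
{
    public string ReaderTypeName => DataReader.GetType().Name;
}
```

If the base class used `reader` directly instead of the protected field, DiagnosticsViewModel would have no way to reach the data reader it passed in.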

Primary Constructor with a View?

For this application, the View needs to have a View Model injected. This happens in the "PeopleViewerWindow" class of the "PeopleViewer.Desktop" project (link to the PeopleViewerWindow.xaml.cs file).

```csharp
public partial class PeopleViewerWindow : Window
{
    PeopleViewModel viewModel { get; init; }

    public PeopleViewerWindow(PeopleViewModel peopleViewModel)
    {
        InitializeComponent();
        viewModel = peopleViewModel;
        this.DataContext = viewModel;
    }

    private async void FetchButton_Click(object sender, RoutedEventArgs e)
    {
        await viewModel.RefreshPeople();
        ShowRepositoryType();
    }

    // other code
}
```

The constructor for the PeopleViewerWindow class does a bit more than just assign constructor parameters to fields. We need to call InitializeComponent because this is a WPF Window. In addition, we set the viewModel property and also set the DataContext for the window.

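Because of this additional code, this class is not a good candidate for a primary constructor. A primary constructor has no body of its own, so there is no obvious place to call InitializeComponent or set the DataContext. As far as I can tell, it is not possible to use a primary constructor here. I have tried several approaches, but I have not found a way to do it.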

Consistency

I place consistency pretty high on my list of qualities I want in my code. I learned this from a team that I spent many years with. We had about a dozen developers who built and supported a hundred applications of various sizes. Because we had a very consistent approach to our code, it was easy to open up any project and get to work. The consistency between the applications made things familiar, and you could find the bits that were important to that specific application.

I still lean towards consistency because humans are really good at recognizing patterns and registering things as "familiar". I want to take advantage of that.

So, for this particular application, my tendency would be to not use primary constructors. Constructor injection is used throughout the application, and I would like it to look similar between the classes. I emphasize "this particular application" because my view could be different if primary constructors could be used across the DI bits.

Incidental vs. Critical Parameters

When it comes to deciding whether to have a class-level field, I've also been thinking about the difference between incidental parameters and critical parameters.

Let me explain with an example. This is an ASP.NET Core MVC controller that has 2 constructor parameters:

```csharp
public class PeopleController : Controller
{
    private readonly ILogger<PeopleController> logger;
    private readonly IPersonReader reader;

    public PeopleController(ILogger<PeopleController> logger,
        IPersonReader personReader)
    {
        this.logger = logger;
        reader = personReader;
    }

    public async Task<IActionResult> UseConstructorReader()
    {
        logger.Log(LogLevel.Information, "UseConstructorReader action called");
        ViewData["Title"] = "Using Constructor Reader";
        ViewData["ReaderType"] = reader.GetTypeName();
        IEnumerable<Person> people = await reader.GetPeople();
        return View("Index", people.ToList());
    }

    // other code
}
```

The constructor has 2 parameters: an ILogger and an IPersonReader. In my mind, one of these is more important than the other.

IPersonReader is a critical parameter. This is the data source for the controller, and none of the controller actions will work without a valid IPersonReader.

ILogger is an incidental parameter. Yes, logging is important. But if the logger is not working, my controller could still operate as usual.

In this case, I might do something strange: use the ILogger parameter directly, and assign the IPersonReader to a field.

Here's what that code would look like:

```csharp
public class PeopleController(ILogger<PeopleController> logger,
    IPersonReader personReader) : Controller
{
    private readonly IPersonReader reader = personReader;

    public async Task<IActionResult> UseConstructorReader()
    {
        logger.Log(LogLevel.Information, "UseConstructorReader action called");
        ViewData["Title"] = "Using Constructor Reader";
        ViewData["ReaderType"] = reader.GetTypeName();
        IEnumerable<Person> people = await reader.GetPeople();
        return View("Index", people.ToList());
    }

    // other code
}
```

I'm not sure how much I like this code. The logger parameter is used directly (as noted above, this is a captured value that can be used throughout the class). The personReader parameter is used for initialization (assigned to the reader field).

Note: Even though it looks like we are mixing initialization and capture, we are not. The logger is captured, and the personReader is used for initialization. We are okay here because we have made a decision (initialize or capture) for each parameter, so we will not get the compiler warning here.
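Here is a console-sized version of the same shape, as a sketch assuming C# 12 or later. ISimpleLog, ListLog, INameReader, FixedNameReader, and PeopleService are hypothetical stand-ins for ILogger, IPersonReader, and the controller, so the code runs without ASP.NET Core. Each parameter is given exactly one role, which is why it builds without CS9124.

```csharp
using System.Collections.Generic;

// Hypothetical stand-ins for ILogger and IPersonReader.
public interface ISimpleLog
{
    void Log(string message);
}

public class ListLog : ISimpleLog
{
    public List<string> Messages { get; } = new();
    public void Log(string message) => Messages.Add(message);
}

public interface INameReader
{
    IReadOnlyList<string> GetNames();
}

public class FixedNameReader : INameReader
{
    public IReadOnlyList<string> GetNames() => new[] { "Ann", "Bo" };
}

// - log is captured (incidental dependency, used directly);
// - nameReader only initializes a readonly field (critical dependency).
public class PeopleService(ISimpleLog log, INameReader nameReader)
{
    private readonly INameReader reader = nameReader;

    public IReadOnlyList<string> ListPeople()
    {
        log.Log("ListPeople called");
        return reader.GetNames();
    }
}
```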

To me (emphasizing personal choice here), this makes the IPersonReader more obvious -- it has a separate field right at the top of the class. The assignment is very intentional.

In contrast, the ILogger is just there. It is available for use, but it is not directly called out.

These are just thoughts at this point. It does conflict a bit with consistency, but everything we do involves trade-offs.

Thoughts on Primary Constructors and C#

I have spent a lot of time thinking about this and experimenting with different bits of code. But my thoughts are not fully formed yet. If you ask me about this in 6 months, I may have a completely different view.

I do not find typing to be onerous. When it comes to code reduction, I do not think about it from the initial creation standpoint; I think about it from the readability standpoint. How readable is this code? Is it simply unfamiliar right now? Will it get more comfortable with use?

These are all questions that we need to deal with when we write code.

Idiomatic C#?

In Marek's article, he worries that we are near the end of idiomatic C# -- meaning a standard / accepted / recommended way to write C# code (see the section "End of idiomatic C#?"). Personally, I think that we are already past that point. C# is a language of options that are different syntactically without being different substantially.

Expression-Bodied Members

For example, whether we use expression-bodied members or block-bodied members does not matter to the compiler. They mean the same thing. But they are visually different to the developer, and human minds tend to think of them as being different.
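As a small illustration, the hypothetical Rectangle type below has one block-bodied and one expression-bodied method. They compute the same value and mean the same thing to the compiler. Only the shape on the page differs.

```csharp
// Hypothetical type for illustration only.
public class Rectangle
{
    public double Width { get; init; }
    public double Height { get; init; }

    // Block-bodied member.
    public double AreaBlock()
    {
        return Width * Height;
    }

    // Expression-bodied member: same meaning, different shape.
    public double AreaExpression() => Width * Height;
}
```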

var

People get too attached to whether to use var or not. I have given my personal views in the past (Demystifying the "var" Keyword in C#), but I don't worry whether anyone else adopts them. This has been treated as a syntax difference without a compiler difference. But that changed with the introduction of nullable reference types: var is now automatically nullable (C# "var" with a Reference Type is Always Nullable). There is still a question of whether developers need to care about this or not. I've only heard about one edge case so far.
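A short sketch of the nullable behavior, assuming nullable reference types are enabled (the VarDemo class is hypothetical). Because `var` with a reference type is inferred as nullable (`string?` here), assigning null later compiles without a warning. An explicit `string` declaration would warn on the same assignment.

```csharp
#nullable enable

// Hypothetical type for illustration only.
public static class VarDemo
{
    public static string? Reassign()
    {
        // `var` infers `string?`, so this null assignment does not warn.
        var message = "hello";
        message = null;

        // By contrast, an explicit non-nullable declaration would warn:
        // string other = "hello";
        // other = null; // warning CS8600

        return message;
    }
}
```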

Target-typed new

Another example is target-typed new expressions. Do you prefer "List<int>? ids = new()" or "var ids = new List<int>()"? The compiler does not care; the result is the same. But humans do care. (And your choice may be determined by how attached to "var" you are.)
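Here are the two spellings side by side, as a sketch with a hypothetical NewDemo class (nullable reference types enabled to match the `List<int>?` form). Both methods return the same list; the only difference is whether the type is written on the left or the right.

```csharp
#nullable enable
using System.Collections.Generic;

// Hypothetical type for illustration only.
public static class NewDemo
{
    // Target-typed new: the type comes from the declaration on the left.
    public static List<int> TargetTyped()
    {
        List<int>? ids = new() { 1, 2, 3 };
        return ids;
    }

    // var: the type comes from the expression on the right.
    public static List<int> WithVar()
    {
        var ids = new List<int>() { 1, 2, 3 };
        return ids;
    }
}
```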

No More Idiomatic C#

These are just 3 examples. Others include the various ways to declare properties, top level statements, and whether to use collection initialization syntax (and on that last one, Visual Studio has given me some really weird suggestions where "ToList()" is fine.)
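For the collection case, here are several spellings of the same list, assuming C# 12 or later (the CollectionDemo class is hypothetical). Rewriting a `.ToList()` call as a collection expression with a spread element (`..`) is one suggestion of the kind mentioned above. That pairing is an assumption about which suggestions the text means. Either way, both versions produce the same list.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical type for illustration only.
public static class CollectionDemo
{
    private static readonly int[] Numbers = { 1, 2, 3, 4, 5, 6 };

    // Familiar LINQ style.
    public static List<int> EvensWithToList() =>
        Numbers.Where(n => n % 2 == 0).ToList();

    // C# 12 collection expression with a spread element.
    public static List<int> EvensWithSpread() =>
        [.. Numbers.Where(n => n % 2 == 0)];

    // Collection initializer vs. collection expression for literal values.
    public static List<int> Initializer() => new List<int> { 2, 4, 6 };
    public static List<int> Expression() => [2, 4, 6];
}
```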

In the end, we need to decide how we write C# moving forward. I have my preferences based on my history, experience, competence, approach to code, personal definition of readability, etc. And when we work with other developers, we don't just have to take our preferences into account, but also the preferences and abilities of the rest of the team. Ultimately, we each need to pick a path and move forward.

Happy Coding!