Here's how I built it and why.

Note: I'm not pretending that this is anything amazing. But I'm not often in the web front-end world, so this was a bit of a challenge for me. In addition, I realized that I was able to put this web application together relatively quickly because I understood the conventions of ASP.NET MVC; I'll be going into detail about those conventions in a later article.

Update Feb 2020: The later article is now available: The Secret Code: ASP.NET MVC Conventions.

Code samples are available on GitHub: jeremybytes/mazes-for-programmers.

The Existing Application

Previously, I wrote some C# code to generate mazes based on the book Mazes for Programmers by Jamis Buck.

The result was a console application that generated text output and graphical output.

If you look closely, you will find that these outputs represent the same maze.

You can run this code yourself by running the "DrawMaze" project from the GitHub repo mentioned above.

Shortcomings

The shortcomings of the application can be seen in the Main method (from the Program.cs file in the DrawMaze project):

The Main method creates a MazeGenerator object and passes in a ColorGrid (that represents the maze grid itself) and a RecursiveBacktracker -- this is the maze algorithm that will be used.

But there are more algorithms to use. Here's the listing from the "Algorithms" project in Visual Studio (also in the Algorithms project on GitHub):

One of the main things I wanted to do was to see the differences in the algorithms. Some are biased along a diagonal, some generate longer paths, some generate twistier paths. For more information on the algorithms themselves, take a look at the articles listed in the README on GitHub.

To switch to a different algorithm with the console application, I had to recompile the application. The same is true for changing the size and color of the maze.

In addition, the current implementation is not cross-platform due to the way the graphical maze is displayed. See this article for an explanation of that: Converting .NET Framework to .NET Core -- UseShellExecute has a Different Default Value.

Solution

- Cross-Platform: A browser-based solution would fix my cross-platform problem. Instead of saving the output to a file, I would stream it to a web page. This would work on any supported platform that has a web browser.

- Parameters: A query string on the web page would allow me to change the algorithm, size, and color by changing the URL (no recompiling).

- Parameter Selection: A page with data entry fields would eliminate the need to craft a query string for parameters.

False Starts and Missteps

I had several false starts and missteps along the way. For example, I started with using the "ASP.NET Core MVC" template on a new project. I got the basics working, but then everything fell apart when I tried to add parameters.

Then I tried starting with the "Empty ASP.NET Core" template. I got the site working (meaning, I could stream the image to the browser and even collect the parameters), but it was a bit of a mess since the template did not include any pages that I could start with for parameter collection.

So I ended up taking what I learned from the "empty" project and starting over again with the "MVC" project. I got that project working (mostly) as I want it to, so that's where things are today.

I won't go into those details here. That's something you'd get from watching a Twitch stream (and I move too slowly to make a Twitch stream interesting). I mainly wanted to get across that things don't work out the first time, and there's often fumbling and frustration involved.

Adding a MazeController

In the web application, I added a MazeController class. With the default routing that is set up in ASP.NET Core MVC, this means that I could navigate to "https://{base-address}/maze" to get to the page.

Again, I will cover this default routing scheme in an upcoming article.

Update Feb 2020: The later article is now available: The Secret Code: ASP.NET MVC Conventions.
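
For reference, the default route in the ASP.NET Core 3.x MVC project template looks roughly like the sketch below. This assumes the template's "Startup" class has not been changed; the version in the repo may differ.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Hosting;
using Microsoft.Extensions.DependencyInjection;

// Sketch of the template's Startup class, trimmed down to the routing parts.
public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        services.AddControllersWithViews();
    }

    public void Configure(IApplicationBuilder app, IWebHostEnvironment env)
    {
        app.UseRouting();
        app.UseEndpoints(endpoints =>
        {
            // "/maze" maps to MazeController.Index: the "Controller" suffix
            // is dropped, the action defaults to Index, and "id" is optional.
            endpoints.MapControllerRoute(
                name: "default",
                pattern: "{controller=Home}/{action=Index}/{id?}");
        });
    }
}
```

With this pattern, "/maze" and "/maze/index" both end up at the same action method.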

Getting a Bitmap of the Maze

What I needed was a bitmap of the maze to stream back to the browser. Let's go back and look at the "Program.cs" file from the console application (from the Program.cs file in the DrawMaze project):

Above, we saw the MazeGenerator object. But we need to see how that is used. This is in the CreateAndShowMaze method (also in the Program.cs file in the DrawMaze project):

This calls "GenerateMaze" on the MazeGenerator.

"GetTextMaze" returns a string that represents the maze in text format.

"GetGraphicalMaze" returns a bitmap. This is the thing that we actually need.

It seems strange to have "GenerateMaze" as a separate step, but this gives us better control over when a new maze is generated so that we can display the same maze in different output formats. As a side note, if you call either of the "Get" methods when a maze has not already been generated, it will automatically generate the maze.
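
The generate-on-demand behavior described above can be sketched like this. This is not the MazeGenerator class from the repo. "LazyMazeSketch" and its members are hypothetical stand-ins, and the "maze" is just a random number so the example stays self-contained.

```csharp
using System;

// Hypothetical stand-in for MazeGenerator; only the
// "generate if needed" behavior is modeled here.
public class LazyMazeSketch
{
    private readonly Random random = new Random();
    private int? mazeId;   // null until a maze has been generated

    // Counts how many times a new maze was created.
    public int GenerateCount { get; private set; }

    // Explicitly creates a new maze (here, just a new id).
    public void GenerateMaze()
    {
        mazeId = random.Next();
        GenerateCount++;
    }

    public string GetTextMaze()
    {
        EnsureGenerated();
        return $"text view of maze {mazeId}";
    }

    public string GetGraphicalMaze()
    {
        EnsureGenerated();
        return $"graphical view of maze {mazeId}";
    }

    // Generates a maze only when none exists yet.
    private void EnsureGenerated()
    {
        if (mazeId == null) GenerateMaze();
    }
}
```

Calling both "Get" methods in a row shows the same maze in two formats. Calling "GenerateMaze" in between would switch to a new one.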

Extracting Things Out

For the "MazeController", I needed to have a "MazeGenerator" to work with. In the original code, the "MazeGenerator" was part of the console application project. So I moved the "MazeGenerator" class (and the "IMazeGenerator" interface) into a separate class library. This way, I could reference it from the console application and the web application. This code is in the "MazeGeneration" project on GitHub.

The Initial MazeController

Now back to the MazeController class. We can use most of the same code as the console application. This code is in the MazeController.cs file in the GitHub project.

Note that the code on GitHub is a bit more complex than what we will see in this section because it includes the parameters.

Here is the initial "Index" method:

Using the default routing in ASP.NET Core MVC, if we navigate to "https://{base-address}/maze", the "Index" method on the "MazeController" class will be called. This method returns an IActionResult. The IActionResult can be a variety of things. We'll talk about that a bit more in an upcoming article.

This method has 3 steps: (1) it generates the bitmap image that we want to return, (2) it changes the bitmap into a byte array, and (3) it returns that byte array with a content type. The last 2 steps stream an image file back to the browser (I stole this code from the internet).

The "File" that we use here is not a file on the file system that we think about normally. This is a method in ControllerBase that returns a "FileContentResult". Again, we won't go into those details here, but stay tuned for another article.

Here is the code from the "Generate" method (again, it will look a bit different in the GitHub repo):

This is the same code that we had in the console application. It creates an instance of the MazeGenerator and then calls "GetGraphicalMaze".

The "ConvertToByteArray" method turns our image into a byte array that we can use for streaming:

This uses a MemoryStream to save the image to a byte array. In this case, we are using the "PNG" format for the image.
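
Putting the three steps together, a minimal sketch could look like the following. This is not the listing from the repo. It assumes the System.Drawing.Common package is referenced, and the "Generate" method here is a placeholder that returns a blank bitmap instead of calling the MazeGenerator.

```csharp
using System.Drawing;
using System.Drawing.Imaging;
using System.IO;
using Microsoft.AspNetCore.Mvc;

public class MazeController : Controller
{
    public IActionResult Index()
    {
        using (Image maze = Generate())                 // (1) get the bitmap
        {
            byte[] bytes = ConvertToByteArray(maze);    // (2) bitmap to byte array
            return File(bytes, "image/png");            // (3) bytes plus content type
        }
    }

    // Placeholder (assumption): the real method would create a
    // MazeGenerator and return the result of GetGraphicalMaze.
    private static Image Generate()
    {
        return new Bitmap(200, 200);
    }

    // Saves the image into an in-memory stream as PNG and returns the bytes.
    public static byte[] ConvertToByteArray(Image image)
    {
        using (var stream = new MemoryStream())
        {
            image.Save(stream, ImageFormat.Png);
            return stream.ToArray();
        }
    }
}
```

The content type passed to "File" should match the format used in "Save"; here both are PNG.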

Just Enough Code

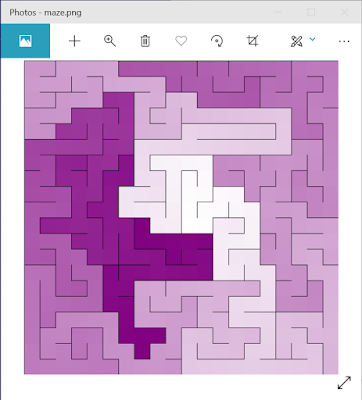

This is just enough code to get a result. We can run the web application and navigate to "https://localhost:5001/maze" and get the following result:

Success!

I was pretty excited when I got this to work. It's a small step (particularly to developers who do this all the time), but it was something new for me.

Now that I had the basics working, I could work on adding parameters.

Adding Parameters

For the parameters, I take advantage of some of the magical auto-wiring that happens with ASP.NET Core MVC.

To start with, I added parameters to the "Generate" method (this is the final method from the MazeController.cs file):

This lets us pass in the size, algorithm, and color.

Note that color is a "MazeColor". This is an enumeration with the valid values in it. Due to some weirdness in the way the colors are generated, there are a limited number that we can choose from (at least for now -- I might spend some time changing this).

The "MazeColor" enum is in the "ColorGrid.cs" file in the MazeGrid project:

The values are White (default), Teal, Purple, Mustard, Rust, Green (more of a pea green), and Blue.

The Size Parameter

We'll add parameters to the "Index" method one at a time so we can see how this works. Here's an updated "Index" method with a "size" parameter added (this is not the final version of the MazeController.cs file in MazeWeb):

In this method, we created some default values for the new parameters of the "Generate" method. The size defaults to 15, the algorithm to the Recursive Backtracker, and the color to purple.

But we also added a "size" parameter to the Index method. And this is where things get fun.

ASP.NET Core MVC tries to fill in parameters however it can. It will check query strings, form values, and routes to see if it can find a matching value. If it finds something it can use, it fills it in; otherwise, it leaves the default.

So here, we're looking for a "size" value. If it doesn't exist, then our parameter will be the default value for an integer (which is 0). In the body of the method, we check to see if the "size" parameter has a value greater than 0. If it does, then we use it; otherwise we use the value of "15".
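
Here is a small sketch of that fallback logic. The controller name "MazeSizeSketchController" and the "ResolveSize" helper are made up for illustration, and the action returns plain text instead of an image to keep things short.

```csharp
using Microsoft.AspNetCore.Mvc;

// Hypothetical controller; not the MazeController from the repo.
public class MazeSizeSketchController : Controller
{
    // "/MazeSizeSketch?size=75" binds 75 to "size". With no matching
    // value in the request, "size" arrives as default(int), which is 0.
    public IActionResult Index(int size)
    {
        return Content($"Maze size: {ResolveSize(size)}");
    }

    // Uses the requested size when it is positive; otherwise falls back to 15.
    public static int ResolveSize(int size)
    {
        const int defaultSize = 15;
        return size > 0 ? size : defaultSize;
    }
}
```

A side effect of the "greater than 0" check is that negative sizes also fall back to the default.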

Setting Size with a Query String

Let's try things out. First, we'll use the same URL that we did before: https://localhost:5001/maze

This uses the default size of "15" (and we can see it is smaller than the default "20" size that we had earlier).

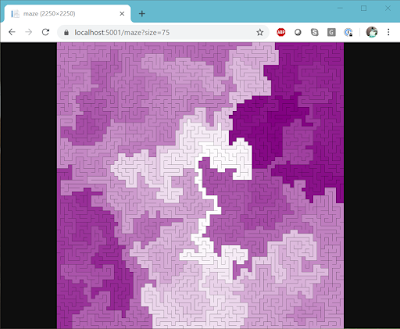

But we can add a query string to set the size: https://localhost:5001/maze?size=75

And now we can see that we can set the size to whatever we want.

Setting the Color

Next, we'll set the color. For this, we'll add another parameter to the Index method (again, this is not the final version of the MazeController.cs file in MazeWeb):

Here we added a "MazeColor" parameter to the method. Notice also that we got rid of our variable that was set to purple.

Let's see how the page behaves now. First, we'll pass in "Green" using this URL: https://localhost:5001/maze?size=50&color=Green

Even though the type is an enumeration, the ASP.NET Core MVC infrastructure does a good job of parsing the parameter. We're passing in a "string" as part of the query string, and it converted that to the correct value of the enum.

But what if we pick something that doesn't match up, like pink? Let's try it: https://localhost:5001/maze?size=50&color=Pink

This gives us no color at all. Well, technically, the selection is "White". Notice that in the enumeration we showed earlier, "White" is set to the value "0". Enums are integers underneath. So if ASP.NET Core MVC cannot parse the parameter (or find it at all), then it uses the default value for that type. In the case of integers (and enums) that value is "0". The result is that we end up with a "White" grid.

If we were to leave the parameter off completely, then we would also get a white grid.
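
The same "fall back to 0" behavior can be seen outside of MVC with Enum.TryParse. This is only a rough analogue (the model binder does not necessarily parse enums this way). The enum below is a stand-in: "White" as 0 comes from the text above, but the order of the other members is an assumption.

```csharp
using System;

// Stand-in enum; only White = 0 is confirmed by the article.
public enum MazeColor { White, Teal, Purple, Mustard, Rust, Green, Blue }

public static class ColorParsingSketch
{
    public static MazeColor Parse(string text)
    {
        // When parsing fails, TryParse leaves "color" at default(MazeColor),
        // which is the member with the value 0 (White).
        Enum.TryParse(text, ignoreCase: true, out MazeColor color);
        return color;
    }
}
```

So "Green" and "green" both give MazeColor.Green, while "Pink" or a missing value gives MazeColor.White.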

Setting the Algorithm

The last parameter is the algorithm. I saved this for last since it is the most complex. Here is the final code for the "Index" method (this is the one that you'll find in the MazeController.cs file in the MazeWeb project):

The "algo" parameter has been added as a string type. That makes things a little more complicated since the "Generate" method needs an instantiated object that implements the "IMazeAlgorithm" interface.

Let's walk through the middle part of the code.

First, we still have the "algorithm" variable that is set to a "RecursiveBacktracker". We need to keep this in case we are unable to parse the parameter.

Next, we have an "if" statement to see if the parameter is empty. If it is empty, then we will use our default.

If it is not empty, then we will use reflection to try to load an object from an assembly. In this case, all of the maze algorithms are in the same assembly (the Algorithms project).

The first line in the "if" block loads the assembly that the "RecursiveBacktracker" is in. This gives us access to all of the algorithms in that assembly.

Next, we use the "GetType" method on the assembly to try to locate the algorithm. The first parameter of the "GetType" method is the fully-qualified type name. So we want something like "Algorithms.RecursiveBacktracker". We don't expect someone to pass in the namespace ("Algorithms") as part of the query string, so we add it on here.

The second parameter of "GetType" is whether we want an error to throw an exception. In our case, we'll say "no exceptions".

The third parameter of "GetType" is case-insensitivity. The "true" value means case doesn't matter. I primarily set this because when I was testing I kept typing "SideWinder" instead of "Sidewinder".

If we can find the type successfully (meaning the "algo" variable is not null), then we use the Activator to create an instance of the type. The "CreateInstance" method returns "object", so we need to cast it to the "IMazeAlgorithm" interface. By using the "as" operator, we will not get an exception if the cast fails. If it fails, we get "null".

In looking at this code, I need a bit more safety built in (in case we end up with a null). It's not too big of a worry since the only types in the assembly are algorithms that implement IMazeAlgorithm. But it probably should be hardened up a bit.
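
Here is one way the lookup could be hardened, as a sketch rather than the code in the repo. The types in the "Algorithms" namespace below are empty stand-ins so the example is self-contained, and "AlgorithmLookupSketch.Resolve" is a made-up helper name.

```csharp
using System;
using System.Reflection;

namespace Algorithms
{
    // Empty stand-ins for the real types in the Algorithms project.
    public interface IMazeAlgorithm { }
    public class RecursiveBacktracker : IMazeAlgorithm { }
    public class Sidewinder : IMazeAlgorithm { }
}

public static class AlgorithmLookupSketch
{
    public static Algorithms.IMazeAlgorithm Resolve(string algo)
    {
        Algorithms.IMazeAlgorithm fallback = new Algorithms.RecursiveBacktracker();
        if (string.IsNullOrEmpty(algo)) return fallback;

        // All algorithms live in the same assembly as RecursiveBacktracker.
        Assembly assembly = typeof(Algorithms.RecursiveBacktracker).Assembly;

        // throwOnError: false returns null for unknown names;
        // ignoreCase: true lets "SideWinder" match "Sidewinder".
        Type type = assembly.GetType($"Algorithms.{algo}",
            throwOnError: false, ignoreCase: true);

        // Skip unknown names, interfaces, abstract classes, and unrelated types.
        if (type == null || type.IsAbstract ||
            !typeof(Algorithms.IMazeAlgorithm).IsAssignableFrom(type))
        {
            return fallback;
        }

        // "as" gives null instead of throwing if the cast fails.
        var created = Activator.CreateInstance(type) as Algorithms.IMazeAlgorithm;
        return created ?? fallback;
    }
}
```

This still assumes every algorithm has a public parameterless constructor; "CreateInstance" throws if it does not.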

By the way, I know these things about Assembly.GetType because I've been looking into that method as well as Type.GetType and how assemblies are loaded in another set of articles: Using Type.GetType with .NET Core & Type.GetType Functionality has not Changed in .NET Core. Plus, I've been working on a follow-up.

Setting the Algorithm

So let's try this. Let's try the "BinaryTree" algorithm: https://localhost:5001/maze?size=30&color=Blue&algo=BinaryTree

The Binary Tree algorithm has a strong diagonal bias (meaning it tends to create diagonal paths through the center of the maze). So we can see that this algorithm is working.

Here's another using "HuntAndKill": https://localhost:5001/maze?size=30&color=Blue&algo=HuntAndKill

This tends to create long paths.

Better Parameter Collection

So things are working. But it is a bit fiddly. Right now, someone needs to know how to create a query string with the right values to use this. It would be much better if we could collect parameters.

And that's what I did. I modified the "Index.cshtml" file for the "Home" view. You can see the file on GitHub: Index.cshtml file in the "Views/Home" folder.

I won't walk through all of the details, but let's hit some highlights. If you're not familiar with ASP.NET Core MVC, I'll cover these bits in more detail in the upcoming article mentioned above.

First, let's look at the "form" element that was added to the page:

The "action" tells us that this is the same as navigating to "/maze" (which is what we have been doing manually). This has the effect of posting the values in the form back to the MazeController.

Before we look at the contents of the form, notice the "@model" at the top of the page. Here is the "MazeModel" object mentioned there (from the MazeModel.cs file in the Models folder):

This is a set of properties that represent the parameters that we need for the web page.

Since these are part of the model, we can create UI elements for them fairly easily. Here are the "Size" and "Color" parameters in the form (from the Index.cshtml file in the Views/Home folder):

The "Html.TextBoxFor" method creates a text box for the "Size" property in the model. (The "@Value" part is supposed to set a default for that field, but it's not working right -- there's still more work to do).

The "Html.DropDownListFor" method creates a drop-down list for the Colors. Here we can match up the value the user sees (the "Text") with the value that we pass to the controller ("Value"). In this case, the text and the value are the same.

The biggest advantage that we get from the drop-down list is that we have a selection of valid values to choose from. No more putting in "Pink" and getting unexpected results.
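
As an aside, the list of items for the drop-down does not have to be typed out by hand. The sketch below builds it from the enum names. This is not how the view in the repo does it; the "ColorListSketch" helper is hypothetical, and the enum is a stand-in with an assumed member order.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using Microsoft.AspNetCore.Mvc.Rendering;

// Stand-in enum; the real one is in the ColorGrid.cs file.
public enum MazeColor { White, Teal, Purple, Mustard, Rust, Green, Blue }

public static class ColorListSketch
{
    // One item per enum member, with matching text and value.
    public static List<SelectListItem> BuildColorItems()
    {
        return Enum.GetNames(typeof(MazeColor))
            .Select(name => new SelectListItem { Text = name, Value = name })
            .ToList();
    }
}
```

The resulting list could be passed as the second argument to "Html.DropDownListFor" in the view.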

Things are similar for the algorithm selection:

In this case, the text and values are a little different. The good part is that we know what the valid values are, and we also don't need to worry about typing in things just right.

This is enough to get our parameter collection screen working.

And now it's really easy to run mazes of different sizes in different colors using different algorithms!

Don't forget that you can get the final code on GitHub: jeremybytes/mazes-for-programmers.

How Does It Work?

How does the controller get the parameters? If we look at the address box in the browser, they aren't being sent as a query string. Since the "form" is set up as a "post", the form data is posted to the MazeController. As mentioned earlier, ASP.NET Core MVC is really good at figuring out parameters on controller methods.

It looks in the form data and finds values that match up with the parameters. The parameter names are not exactly the same (the case is different for one thing). But the infrastructure is able to figure everything out. It's pretty cool once you get used to it.
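
To get a feel for the name matching, here is a rough analogue using a dictionary that ignores case. This is not how the model binder is implemented. "FormLookupSketch" is a made-up helper, and the field names in the usage note are assumptions.

```csharp
using System;
using System.Collections.Generic;

public static class FormLookupSketch
{
    // Copies posted fields into a dictionary that ignores key casing,
    // so a field named "Size" can be found by asking for "size".
    public static Dictionary<string, string> ToLookup(
        IEnumerable<KeyValuePair<string, string>> fields)
    {
        var lookup = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        foreach (var field in fields)
        {
            lookup[field.Key] = field.Value;
        }
        return lookup;
    }
}
```

If the form posts "Size=30", a lookup for "size" (the controller parameter name) still finds "30".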

Hitting Goals

After all this, did I hit the goals for this project?

Cross-Platform?

Yes! I confirmed the solution works on macOS. Here's a screenshot of it running locally using Safari:

One note about a problem I ran into: The first time I ran the application, I got a GDI+ runtime error, specifically about a missing dll. Fortunately, a quick search came up with this article: SOLVED: System.Drawing .NETCore on Mac, GDIPlus Exception.

The solution is to install the GDI+ mono library. On macOS, this is easy to install with "brew install mono-libgdiplus". Check the article for more details.

Update March 2020: For Ubuntu (and other Linux distributions that use apt-get), you can get the GDI+ library with "apt-get install libgdiplus". I found out about this while putting together a Docker image to run this application.

Parameters?

Yes! The application has parameters for maze size, color, and algorithm. Putting together a query string in the right format allows this to be changed quickly.

Parameter Selection?

Yes! Even though parameters are available, using a query string is a bit brittle. It is easy to get wrong and hard to know which values are valid. The parameter web page gives us easy access to the available parameter values and makes things much less brittle.

Overall, this approach solved the problems that I set out to solve.

Wrap Up

As mentioned, this isn't groundbreaking. But I had fun doing it. There are still a few things to fix. I haven't figured out how to get default values into my parameter form. I've tried a few things (including the current code that we saw above), but haven't had luck so far.

In addition, rather than streaming back a file that takes up a whole page, it would be interesting to stream back the file to another page (I actually had that working, but couldn't figure out how to do the parameters). So I'll be pulling this out from time to time.

And I owe you another article as well. I spent a few hours putting this website together (even with the problems that I ran into). I realize that the reason I was able to put it together so quickly is that I know the conventions of ASP.NET Core MVC, in particular how the controllers and views hook up, how the default routing works, how query string parameters get to controller methods, and how form data gets to controller methods. I'll go through all of these things in a future article.

Update Feb 2020: An article about conventions is now available: The Secret Code: ASP.NET MVC Conventions.

Happy Coding!