So I got a new book, and I was pretty excited about it: Mazes for Programmers by Jamis Buck (Amazon link).

I figured this would be a good chance for me to practice some functional programming -- and then I did it completely wrong.

Making Mazes

After reading the first 2 chapters, I was well on my way to creating mazes. I created some using the Binary Tree algorithm:

And some using the Sidewinder algorithm:

It's not hugely exciting so far, but it's a start. And it's nice to have something visual after just a little bit of work.

Very Object-Oriented Code

These mazes weren't created using functional programming; they were created with OO code (which I'll talk about in a bit). The book samples are written in Ruby. Here's the Binary Tree algorithm:

I'm not much of a Ruby programmer, so I did the same thing in C#:

You can grab my code from GitHub if you're interested: jeremybytes: mazes-for-programmers.

Functionally Challenged

So my goal is to get this running with F# because I need to practice to get comfortable with functional programming. I followed the book code in C# because I figured I'd have a better chance of understanding what was going on after I had a working version.

Yes, the code is very object-oriented: there is a Grid class that consists of a set of cells and also knows how to display itself, and there is a Cell class that knows how to link itself to other cells. I figured it was pretty safe to start with the algorithms, though.

If we look at the "Binary Tree" code above, we have a static method that takes a grid as a parameter and returns a grid. It doesn't rely on anything external to itself. This looked like a really good candidate to be converted over to a functional style using F#.

I didn't get very far. In fact, I created a complete mess (that didn't work). I ended up deleting everything I had.

I tried taking the C# code and breaking out bits and pieces into separate functions: the part that gets the collection of neighbors of a cell, the part that creates a link based on a random value. The goal was to use these functions in a mapping of the entire cell collection.
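To make that decomposition concrete, here is a minimal F# sketch of the idea. It is not the code that was deleted, and it does not use the book's Grid and Cell classes: the `Direction` type, the (row, column) cell model, and the function names are all assumptions made for illustration. It relies only on the usual Binary Tree rule that each cell links either north or east when those neighbors exist.

```fsharp
// Hypothetical, simplified model: a cell is just a (row, column) pair.
type Direction = North | East

// Directions a cell may link in: north unless it is on the top row,
// east unless it is in the last column.
let candidates cols (row, col) =
    [ if row > 0 then yield North
      if col < cols - 1 then yield East ]

// Choose one candidate from a supplied random number; None when there are no candidates.
let chooseLink (pick: int) (options: Direction list) =
    match options with
    | [] -> None
    | _ -> Some (List.item (pick % List.length options) options)

// Map every cell to its chosen link. The random source is a parameter,
// so it can be swapped for a fixed function in tests.
let binaryTree rows cols (next: unit -> int) =
    [ for row in 0 .. rows - 1 do
        for col in 0 .. cols - 1 do
            yield ((row, col), chooseLink (next ()) (candidates cols (row, col))) ]

// Usage: describe a 4x4 maze using a seeded generator.
let rng = System.Random(42)
let maze = binaryTree 4 4 (fun () -> rng.Next())
```

The result is a plain list of cells and chosen directions rather than objects holding references to each other, which sidesteps the linking problem instead of solving it.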

I wish I had kept the code just so I could show how wrong a direction I was headed.

Trying Again

I'm going to take another shot at this, but I need to understand more of the big picture first. For example, each Cell object knows about all the cells that it's "linked" to. Look at this picture:

The circled cell object knows that it is linked to 2 other cells: one on the "east" and one on the "south". But more than that, the cell has references to those other 2 cells.

What this means is that by starting with any individual cell object, we can create a graph of the entire maze by walking the links for each cell. This becomes important in Chapter 3 where we start looking for ways to solve a maze using Dijkstra's Algorithm.
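Walking the links from one cell can be sketched without object references at all. The F# below assumes a hypothetical representation where each cell has an integer id and a `Links` map lists the ids it is linked to; neither comes from the book. It does a breadth-first walk, which gives the same step counts as Dijkstra's algorithm when every step costs the same.

```fsharp
// Hypothetical representation: each cell id maps to the ids of the cells it links to.
type Links = Map<int, int list>

// Breadth-first walk from a start cell; returns every reachable cell
// together with its number of steps from the start.
let distancesFrom (start: int) (links: Links) : Map<int, int> =
    let rec walk frontier (seen: Map<int, int>) =
        match frontier with
        | [] -> seen
        | _ ->
            // Every linked neighbor of the current frontier, one step further out.
            let next =
                frontier
                |> List.collect (fun cell ->
                    let d = Map.find cell seen
                    Map.tryFind cell links
                    |> Option.defaultValue []
                    |> List.map (fun n -> (n, d + 1)))
            // Keep only cells not seen before; the first distance found is the shortest.
            let (seen', added) =
                next
                |> List.fold (fun (s, acc) (n, d) ->
                    if Map.containsKey n s then (s, acc)
                    else (Map.add n d s, n :: acc)) (seen, [])
            walk added seen'
    walk [ start ] (Map.ofList [ (start, 0) ])

// Usage: a 2x2 maze whose passages run 0 - 1 - 3 - 2.
let links = Map.ofList [ (0, [ 1 ]); (1, [ 0; 3 ]); (3, [ 1; 2 ]); (2, [ 3 ]) ]
let distances = distancesFrom 0 links
```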

Mazes for Programmers intentionally stays away from graph theory. But I think I'm going to have to learn some graph theory if I want to approach this from a more sane direction in the functional world.

Taking the existing objects which are linked to other objects which are linked to other objects... that's going to create a bit of a mess (at least with my current knowledge of these things).

Continuing

First tries aren't always successful. In fact, they can be a huge learning experience when you discover that you're headed in the wrong direction. When we recognize the wrong direction, we can step back and look at other options.

I'm going to continue coding through the book with C#. This will help me understand the problem space and the solutions. Along the way, I'll start looking into potential functional ways of implementing the code.

Rather than taking the current objects and shoving them into F#, I'm pretty sure there's a better approach. But for that, I'll need to do a bit more learning. Keep an eye out here (and on the GitHub code) to see where I end up.

Happy Coding!

Wednesday, March 15, 2017

Monday, March 6, 2017

Rediscovering Fire: Effective Learning?

So I've got a bit of a dilemma. I ran across an article today (thanks to Tomas Petricek (@tomaspetricek) for pointing it out), and after drilling into it a bit, I felt like I've been rediscovering fire while I've been learning functional programming.

So here's the question:

"Does the rediscovery make the learning more effective?"

The Article

The article in question is from Eirik Tsarpalis (@eiriktsarpalis): F# and Purity. This is a great read, particularly if you've been trying to reconcile functional and imperative programming (like I have). Go read it now.

But as I read the article (and started following some of the links), I found quite a few things that looked familiar.

Down the Rabbit Hole

When I started learning functional programming, I tried to be "pure", meaning that I wanted to use functional concepts all the way down. Eirik points to an article about Fibonacci Sequences by Adam Esterline: Fibonacci Sequence in Haskell. If you've tried to write Fibonacci sequences in Haskell, go read this now.

Haskell by its nature is a "pure" functional language in that it doesn't let you do those imperative things that we have access to in languages like F#. But there are still different ways to implement the Fibonacci sequence.

Adam points to the "Slow but Easy" method using recursion. This looked very familiar, because I created something very similar, thinking that recursion was the "right" solution to this problem.

Here's my code (more on this here: A Functional Fibonacci Sequence (as learned by an imperative programmer)):

This code works, but it is *SLOW*. It takes over 20 seconds to calculate the 35th place. And it just gets slower from there.
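For reference, a textbook naive recursive definition looks like the sketch below. This is not the code from the linked article; the name `fib` and the choice to index from 0 are assumptions. It shows where the slowness comes from: every call makes two more calls, so the same values are recomputed over and over.

```fsharp
// Naive recursion: each call spawns two more, so the work grows exponentially with n.
let rec fib n =
    if n <= 1 then n
    else fib (n - 1) + fib (n - 2)

// Usage: the first ten values, indexing from 0.
let firstTen = List.map fib [ 0 .. 9 ]
// firstTen = [0; 1; 1; 2; 3; 5; 8; 13; 21; 34]
```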

Adam shows a similar slowness and points to a solution in Haskell using "zipWith". This is a solution that I ran across as well:

This solution is *FAST*. I thought I understood how this worked (and tried to explain it a bit in my article), but Adam points to a better explanation on StackOverflow from someone who understands how Haskell works: Understanding a Recursively Defined List. (You should definitely read this as well.)
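The same "build each value from the previous two" idea can be sketched in F#. This is an analogue that uses `Seq.unfold`, not a translation of the Haskell "zipWith" definition, and the name `fibs` is an assumption. Each element is computed once from a carried pair, so reaching the 35th place takes 35 additions instead of millions of recursive calls.

```fsharp
// Infinite lazy sequence: carry the pair (current, next) forward, emitting current at each step.
let fibs : seq<bigint> =
    Seq.unfold (fun (a, b) -> Some (a, (b, a + b))) (0I, 1I)

// Usage: take the first eight values, or jump straight to position 35 (indexing from 0).
let firstEight = fibs |> Seq.take 8 |> Seq.toList
// firstEight = [0; 1; 1; 2; 3; 5; 8; 13]
let place35 = fibs |> Seq.item 35
// place35 = 9227465
```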

Granted, Adam's article was published after mine, but the StackOverflow answer was published years before mine. So this information has been around for a while.

So I struggled through this problem (speed of the recursive implementation), and I asked questions of functional devs who have much more experience than I do. This questioning led me to look for other solutions, and it made me understand that I wasn't "doing it wrong" because I didn't use a standard recursive function implementation.

Popping the Stack

So back to Eirik's article. The core of the article talks about how "pure" functional programming is all based on mathematical functions, but our CPUs and environments aren't really set up to deal with these things. Instead, our environments (in my case, Windows and .NET) are really set up to deal with imperative programming.

The result is that if we program only with pure functions, we often run into issues of performance, heap usage, and even stack overflows (which I'm pretty good at generating).

The solution is a compromise: we keep our functions externally "pure", meaning discrete input leads to deterministic output, no mutation or shared state, etc. But we can use imperative code inside of those functions. We just need to make sure the imperative bits don't leak out of our functions.

Again, this sounded really familiar. When I was working on my Digit Recognition application, I was using code from Mathias Brandewinder's book on machine learning. I ran into some performance issues. The functions that he created were great for batch processing, but I was running the functions in a different way, which meant I was running them over and over again.

Here's the original function (you can read more about it here: Exploring the Digit Recognizer with F#):

To me, this seemed like the "right" way of coding this. It was using the functions on the Array object, and it was piping results from one function to another. But this was *SLOW*.

I had a chance to talk to Mathias about this. I showed him my F# code (above) and also the C# code that I had (which used a "for" loop). The C# code was significantly faster. So he said that we could do the same thing in the F# code:

This made things significantly faster. I'm really glad that Mathias recommended this because he is a serious functional programmer, and it was like it gave me permission to cheat.

But when I looked at it more closely, it really wasn't cheating -- for the same reasons that Eirik gives in his article. In this case, there is mutable state inside the function, but that mutation never leaks out. It is contained in the function itself. From the outside, this function is "pure": it takes 2 arrays and returns a deterministic result.
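The contrast can be sketched with a pair of distance functions. These are illustrative stand-ins, not the functions from the book or from the digit recognizer project: the names, the use of Manhattan distance, and the assumption that both arrays have the same length are all mine. Both take two arrays and return the same result; only the second uses a local mutable accumulator.

```fsharp
// Pipeline version: builds an intermediate array on every call, then sums it.
let manhattanPiped (a: int[]) (b: int[]) =
    Array.map2 (fun x y -> abs (x - y)) a b |> Array.sum

// Loop version: a local mutable accumulator that never escapes the function.
// Assumes both arrays have the same length.
let manhattanLoop (a: int[]) (b: int[]) =
    let mutable total = 0
    for i in 0 .. a.Length - 1 do
        total <- total + abs (a.[i] - b.[i])
    total

// Usage: both versions agree for the same inputs.
let piped = manhattanPiped [| 1; 5; 3 |] [| 4; 1; 3 |]
let looped = manhattanLoop [| 1; 5; 3 |] [| 4; 1; 3 |]
// piped = 7, looped = 7
```

A caller cannot tell which version it is using, which is the sense in which the mutation stays contained.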

(If you're interested in the Digit Recognizer project, here's where you can find all the articles: Machine Learning (sort of)).

Rediscovering Fire

I feel like quite a bit of my learning in programming has been about rediscovering fire. These are just two examples from the functional programming world. And that brings us back to the original question:

Does the rediscovery make the learning more effective?

I know that in my case, going through the pain of finding out that my solution had serious performance issues is what spurred me to look for other solutions.

Pretty much every talk that I do is based on some pain or hurdle that I had in my development career. My goal is to help other developers over those hurdles. I get stuck easily, and I also get easily frustrated. I don't want other folks to get stuck and frustrated.

Finding the Balance

If we are constantly rediscovering fire, then we won't make progress beyond those who came before us. We'll just keep walking the same road over and over again.

If we don't go through the pain ourselves, then we don't fully appreciate the solutions that we are using. I know that when I was a young programmer, I didn't think that I needed to follow "best practices" (okay, so I really hate that term, but I'll use it anyway). When I got myself into trouble too many times, I finally learned that "best practices" are a good place to start, particularly if I'm new to an environment.

There's a balance somewhere here. A place where we can learn from our own pain, but we can also learn from the people who came before us.

I need to keep this balance in mind for myself and also for the developers that I teach on a regular basis.

Happy Coding!

Wednesday, January 25, 2017

Machine Learning Digit Recognizer: Automatically Recognizing Errors

As an ongoing project, I've been working on an application that lets us visualize the results of recognizing hand-written digits (a machine learning project). To see a history, check out the "Machine Learning (sort of)" section in my Functional Programming Articles. You can grab the code from GitHub: jeremybytes/digit-display.

One thing that has kept me from working with this project is that looking for mistakes is tedious. I had to scan through the results which included the bitmap and the computer prediction and determine if it was correct. I had a bit of a brainstorm on how I could automate this process, and that's what this is about.

Here's the result:

All of the "red" marks are done automatically. No more manual error counting.

Using a Single File

The key to getting this to work was to use a single data file instead of two data files.

Originally, I used a separate training set (approximately 42,000 records) and validation set (approximately 28,000 records). These files came from the Kaggle challenge that I talked about way back in my original article (Wow, have I really been looking at this for 2-1/2 years?). Both files contain the bitmap data for the hand-written digits. But the training set also includes a field for the actual value represented.

Rather than using both files, I decided to use the training set file for both purposes. This way, I could check the actual value to see if the prediction was correct.

There is a bit of a problem, though. If I used the same records for both training and validation, I would end up with 100% accuracy because the records are exactly the same. So the trick was to take a single file and cut out the bit that I wanted to use for the validation set and exclude it from the training set.

Here's an example. Let's say that I had a training set with 21 values:

5, 3, 6, 7, 1, 8, 2, 9, 2, 6, 0, 3, 3, 4, 2, 1, 7, 0, 7, 2, 5

What we can do is carve up the set so that we use some of it for training and some for validation. So, we can use 5 numbers for validation starting at an offset of 2:

5, 3, [6, 7, 1, 8, 2,] 9, 2, 6, 0, 3, 3, 4, 2, 1, 7, 0, 7, 2, 5

This leaves us with 2 separate sets:

Training: 5, 3, 9, 2, 6, 0, 3, 3, 4, 2, 1, 7, 0, 7, 2, 5

Validation: 6, 7, 1, 8, 2

This is obviously a simplified example. In our real file of 42,000 records, we'll be carving out a set of 325 records that we can work with. This still leaves us with lots of training data.
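The carving step in the small example can be sketched as a single function. The name `carve` and its parameter order are assumptions for illustration; the project's real loaders work on file rows rather than a plain array of digits.

```fsharp
// Split values into (training, validation): validation is `count` items
// starting at `offset`, and training is everything else in the original order.
let carve offset count (values: 'a[]) =
    let validation = values |> Array.skip offset |> Array.take count
    let training =
        Array.append (Array.take offset values) (Array.skip (offset + count) values)
    (training, validation)

// Usage: the sample values from the example, 5 validation items at offset 2.
let sample = [| 5; 3; 6; 7; 1; 8; 2; 9; 2; 6; 0; 3; 3; 4; 2; 1; 7; 0; 7; 2; 5 |]
let (training, validation) = carve 2 5 sample
// training   = [| 5; 3; 9; 2; 6; 0; 3; 3; 4; 2; 1; 7; 0; 7; 2; 5 |]
// validation = [| 6; 7; 1; 8; 2 |]
```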

Note: This code is available in the "AutomaticErrorDetection" branch in the GitHub project: AutomaticErrorDetection branch.

Configuration Values

To hold the values, I opted to use the App.config file. I'm not very happy with this solution, but it works. I would much rather be able to select the values in the application itself, but that was troublesome. I'll come back to talk about this a bit later.

Here's the App Settings section of the configuration file:

This shows that our training file and data file now point to the same thing ("train.csv"). It also shows that we want to use 325 records for prediction, and that we want to start with record number 1,000 in the file.

Loading Training Data

This means that when we load up the records to use as a training set, we want to take the first 1,000 records, then skip 325 records, and then take the rest.

Here is the original function to load up the training set (in "FunRecognizer.fs"):

This just loaded up all of the records in the file.

Here are the updated functions to load up just the parts of the file we want:

First we pull some values out of the configuration file, including the file name, the offset, and the record count. One thing I like here is that we can pipe the values for "offset" and "recordCount" to "Int32.Parse" to convert them from string values to integer values really easily.

Then we load up the data. By using "Array.concat", we can take two separate arrays and combine them into a single array. In this case, we're looking at the data in the file. The first part resolves to "data.[1..1000]" which would give us the first 1000 records. The second part resolves to "data.[1000+325+1..]" which is "data.[1326..]". Since we don't have a second number, this will start at record 1326 and just read to the end of the array (file).

The effect is that when we load up the training set, we skip over 325 records that we can then use as our validation set.
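The slicing described above can be tried on a small in-memory array. This sketch leaves out the file reading and the configuration lookup; the function name `trainingRows` and the stand-in data are assumptions. It treats index 0 as a header row, which is why the first slice starts at 1.

```fsharp
// Training rows from the file's lines. Index 0 is assumed to be a header row,
// so the first slice starts at 1 and the second starts just past the validation block.
let trainingRows offset recordCount (data: string[]) =
    Array.concat [ data.[1 .. offset]; data.[offset + recordCount + 1 ..] ]

// Stand-in for the file: a header plus ten numbered rows.
let lines = Array.append [| "header" |] (Array.init 10 (fun i -> sprintf "row%d" (i + 1)))

// Usage: offset 3 and record count 4 leave rows 4 through 7 out of the training set.
let training = trainingRows 3 4 lines
// training = [| "row1"; "row2"; "row3"; "row8"; "row9"; "row10" |]
```

With the real values (offset 1,000 and record count 325), the two slices are the "data.[1..1000]" and "data.[1326..]" described above.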

Loading Validation Data

We do something similar on the validation side. When we load up the data, we'll use the same file, and we'll pull out just the 325 records that we want.

Here's the method for that (in "FileLoader.cs"):

This starts by grabbing the values from configuration.

Then using a bit of LINQ, we Skip the first 1,000 records (in the case of the configuration shown above), then we Take 325 records (also based on the configuration). The effect is that we get the 325 records that were *not* used in the training set above.

By doing this, we can use the same file, but we don't have to be concerned that we're using the same records.
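The same skip-then-take shape can be sketched in F# with the Seq module, which mirrors LINQ's Skip and Take. This is not the C# method from "FileLoader.cs"; the function name, the stand-in data, and the extra skip for a header row are assumptions.

```fsharp
// Validation rows: skip the header (assumed), skip `offset` records, then take `recordCount`.
let validationRows offset recordCount (lines: seq<string>) =
    lines
    |> Seq.skip 1
    |> Seq.skip offset
    |> Seq.take recordCount
    |> Seq.toArray

// Stand-in for the file: a header plus ten numbered rows.
let lines = Array.append [| "header" |] (Array.init 10 (fun i -> sprintf "row%d" (i + 1)))

// Usage: offset 3 and record count 4 select rows 4 through 7.
let validation = validationRows 3 4 lines
// validation = [| "row4"; "row5"; "row6"; "row7" |]
```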

Marking Errors

There were a few more changes to allow the marking of errors. We can pull out the actual value from the data in the file and use that to see if our prediction is correct.

I added a parameter to the method that creates all of the UI elements (in "RecognizerControl.xaml.cs"):

The new parameter is "string actual". I'm using a string here rather than an integer because the prediction coming from our digit recognizer is a string.

Then in the body of this method, we just see if the prediction and actual are the same:

If they don't match, then we'll set the background color and increment the number of errors. There is a little more code here, but this gives enough to show what direction we're headed.
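Stripped of the UI work, the check is a comparison of two strings per record. The sketch below is in F# rather than the C# of "RecognizerControl.xaml.cs", and the function name and tuple shape are assumptions; it only counts mismatches and does not touch any background colors.

```fsharp
// Count the results where the prediction disagrees with the known value.
// Each result is a (prediction, actual) pair of strings.
let countErrors (results: (string * string) list) =
    results
    |> List.filter (fun (prediction, actual) -> prediction <> actual)
    |> List.length

// Usage: one of these three predictions is wrong.
let errors = countErrors [ ("7", "7"); ("4", "9"); ("0", "0") ]
// errors = 1
```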

The result is that the errors are marked automatically. This saves a huge amount of time (and tedium) since we don't have to mark them manually. (Plus, I was never confident that I caught them all.)

Exploring Data

I wanted the values to be configurable because it's really easy to tune algorithms to work with a particular set of data. I wanted to be able to easily try different sets of data. Even with the simple algorithms that we have in this code, we can see differences.

If we pick an offset of 1,000, we get these results:

But if we pick an offset of 10,000, we get these results:

With the first set of data, the Euclidean Classifier looks a lot more accurate. But with the second set of data, the Manhattan Classifier looks to be more accurate. So I want to be able to try different validation sets to make sure that I'm not tuning things just for specific values.
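A tiny example shows how the two distance measures can disagree about which training record is closest. This sketch assumes a nearest-neighbor style classifier and uses made-up two-pixel "images" and labels; the function names are illustrative, not the project's.

```fsharp
// Manhattan distance: sum of absolute differences.
let manhattan (a: int[]) (b: int[]) =
    Array.fold2 (fun acc x y -> acc + abs (x - y)) 0 a b

// Euclidean distance: square root of the sum of squared differences.
let euclidean (a: int[]) (b: int[]) =
    Array.fold2 (fun acc x y -> acc + (x - y) * (x - y)) 0 a b
    |> float
    |> sqrt

// Predict the label of the training example closest to the given pixels.
let predict distance (training: (string * int[]) list) (pixels: int[]) =
    training
    |> List.minBy (fun (_, p) -> distance p pixels)
    |> fst

// Usage: the same query gets a different answer from each measure.
let training = [ ("7", [| 3; 3 |]); ("1", [| 0; 5 |]) ]
let byManhattan = predict manhattan training [| 0; 0 |]
// byManhattan = "1" (distance 5 beats 6)
let byEuclidean = predict euclidean training [| 0; 0 |]
// byEuclidean = "7" (about 4.24 beats 5)
```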

I also do like the side-by-side comparison. This shows if the errors are the same or different.

Easily Changing Values

In earlier versions of this application, the "Record Count" and "Offset" values on the screen were editable values. This made it really easy to change the values and click "Go" to see the new results. But that's not possible when we're using the configuration file. So why the change?

On my first attempt, I tried to figure out how to get the values from the screen to the relevant parts of the application. This was pretty easy to do in the validation set code, but it got a bit trickier to get it into the training set code.

The training set code is nested several levels deep in the functional code. This meant adding some parameters to the "reader" function that is shown above. But because this is called within a function that is called within a function that is called within a function, how could I get those values in there?

I tried adding a parameter and then bubbling that parameter up. This became problematic from a syntactical standpoint, but I also wasn't happy exposing those values in that way. It seemed very "leaky".

So an easy way to fix this was to store the values in a central location that everyone could access. And that's why I created the configuration file.

Future Updates

Now that I have this working, I'm going to do a bit more experimentation. I would like to have the ability to change the values without having to restart the application. So, I'm going to put back the editable text boxes and see if I can work with a separate object to hold these values.

This would have to be in a separate project to prevent circular dependencies. And I would also want to figure out how to use the same immutable object for the training code and validation code. This will ensure that both are using the same values. If they use different values, then things will fall apart pretty quickly.
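One possible shape for that shared object is an F# record, which is immutable by default. This is only a sketch of the idea, not a design the project uses: the `DataSettings` type, its fields, and the two helper functions are all hypothetical.

```fsharp
// Hypothetical shared settings; F# record fields cannot be changed after creation.
type DataSettings = { FileName: string; Offset: int; RecordCount: int }

// Record numbers used for validation (1-based, matching the file's record numbering).
let validationRecords (s: DataSettings) =
    [ s.Offset + 1 .. s.Offset + s.RecordCount ]

// Record numbers used for training: everything outside the validation block.
let trainingRecords (s: DataSettings) totalRecords =
    [ 1 .. s.Offset ] @ [ s.Offset + s.RecordCount + 1 .. totalRecords ]

// Usage: both sides read the same value, so they cannot disagree about the split.
let settings = { FileName = "train.csv"; Offset = 3; RecordCount = 4 }
let validation = validationRecords settings
// validation = [4; 5; 6; 7]
let training = trainingRecords settings 10
// training = [1; 2; 3; 8; 9; 10]

// Changing a value means creating a new record, leaving the old one untouched.
let moved = { settings with Offset = 5 }
```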

Wrap Up

It's always fun to play and experiment with different things. Now that I've made this application easier to work with (and much less tedious), I'm more likely to explore different algorithms. I've done a little bit of experimentation in the past, but it will be easier to see results now.

As I continue, I'll look at converting more of this code to F# (I still need more practice with that). And we'll see if we can teach the computer to get better at recognizing those hand-written digits.

Happy Coding!

One thing that has kept me from working with this project is that looking for mistakes is tedious. I had to scan through the results which included the bitmap and the computer prediction and determine if it was correct. I had a bit of a brainstorm on how I could automate this process, and that's what this is about.

Here's the result:

All of the "red" marks are done automatically. No more manual error counting.

Using a Single File

The key to getting this to work was to use a single data file instead of two data files.

Originally, I used a separate training set (approximately 42,000 records) and validation set (approximately 28,000 records). These files came from the Kaggle challenge that I talked about way back in my original article (Wow, have I really been looking at this for 2-1/2 years?). Both files contain the bitmap data for the hand-written digits. But the training set also includes a field for the actual value represented.

Rather than using both files, I decided to use the training set for both files. This way, when I could check the actual value to see if the prediction was correct.

There is a bit of a problem, though. If I used the same records for both training and validation, I would end up with 100% accuracy because the records are exactly the same. So the trick was to take a single file and cut out the bit that I wanted to use for the validation set and exclude it from the training set.

Here's an example. Let's say that I had a training set with 20 values:

5, 3, 6, 7, 1, 8, 2, 9, 2, 6, 0, 3, 3, 4, 2, 1, 7, 0, 7, 2, 5

What we can do is carve up the set so that we use some of it for training and some for validation. So, we can use 5 numbers for validation starting at an offset of 2:

5, 3, [6, 7, 1, 8, 2,] 9, 2, 6, 0, 3, 3, 4, 2, 1, 7, 0, 7, 2, 5

This leaves us with 2 separate sets:

Training: 5, 3, 9, 2, 6, 0, 3, 3, 4, 2, 1, 7, 0, 7, 2, 5

Validation: 6, 7, 1, 8, 2

This is obviously a simplified example. In our real file of 42,000 records, we'll be carving out a set of 325 records that we can work with. This still leaves us with lots of training data.

Note: This code is available in the "AutomaticErrorDetection" branch in the GitHub project: AutomaticErrorDetection branch.

Configuration Values

To hold the values, I opted to use the App.config file. I'm not very happy with this solution, but it works. I would much rather be able to select the values in the application itself, but that was troublesome. I'll come back to talk about this a bit later.

Here's App Settings section of the configuration file:

This shows that our training file and data file now point to the same thing ("train.csv"). It also shows that we want to use 325 records for prediction, and that we want to start with record number 1,000 in the file.

Loading Training Data

This means that when we load up the records to use as a training set, we want to take the first 1,000 records, then skip 325 records, and then take the rest.

Here is the original function to load up the training set (in "FunRecognizer.fs")

|

| Original Loader - All Records |

This just loaded up all of the records in the file.

Here are the updated functions to load up just the parts of the file we want:

|

| New Loader - Skip Over the Records to be Validated |

First we pull some values out of the configuration file, including the file name, the offset, and the record count. One thing I like here is that we can pipe the values for "offset" and "recordCount" to "Int32.Parse" to convert them from string values to integer values really easily.

Then we load up the data. By using "Array.concat", we can take two separate arrays and combine them into a single array. In this case, we're looking at the data in the file. The first part resolves to "data.[1..1000]" which would give us the first 1000 records. The second part resolves to "data.[1000+325+1..]" which is "data.[1326..]". Since we don't have a second number, this will start at record 1326 and just read to the end of the array (file).

The effect is that when we load up the training set, we skip over 325 records that we can then use as our validation set.

Loading Validation Data

We do something similar on the validation side. When we load up the data, we'll use the same file, and we'll pull out just the 325 records that we want.

Here's the method for that (in "FileLoader.cs"):

This starts by grabbing the values from configuration.

Then using a bit of LINQ, we Skip the first 1,000 records (in the case of the configuration shown above), then we Take 325 records (also based on the configuration). The effect is that we get the 325 records that were *not* used in the training set above.

By doing this, we can use the same file, but we don't have to be concerned that we're using the same records.

Marking Errors

There were a few more changes to allow the marking of errors. We can pull out the actual value from the data in the file and use that to see if our prediction is correct.

I added a parameter to the method that creates all of the UI elements (in "RecognizerControl.xaml.cs"):

The new parameter is "string actual". I'm using a string here rather than an integer because the prediction coming from our digit recognizer is a string.

Then in the body of this method, we just see if the prediction and actual are the same:

If they don't match, then we'll set the background color and increment the number of errors. There is a little more code here, but this gives enough to show what direction we're headed.

The result is that the errors are marked automatically. This saves a huge amount of time (and tedium) since we don't have to mark them manually. (Plus, I was never confident that I caught them all.)

Exploring Data

I wanted the values to be configurable because it's really easy to tune algorithms to work with a particular set of data. I wanted to be able to easily try different sets of data. Even with the simple algorithms that we have in this code, we can see differences.

If we pick an offset of 1,000, we get these results:

But if we pick an offset of 10,000, we get these results:

With the first set of data, the Euclidean Classifier looks a lot more accurate. But with the second set of data, the Manhattan Classifier looks to be more accurate. So I want to be able to try different validation sets to make sure that I'm not tuning things just for specific values.

I also do like the side-by-side comparison. This shows if the errors are the same or different.

Easily Changing Values

In earlier versions of this application, the "Record Count" and "Offset" values on the screen were editable values. This made it really easy to change the values and click "Go" to see the new results. But that's not possible when we're using the configuration file. So why the change?

On my first attempt, I tried to figure out how to get the values from the screen to the relevant parts of the application. This was pretty easy to do in the validation set code, but it got a bit trickier to get it into the training set code.

The training set code is nested several levels deep in the functional code. This meant adding some parameters to the "reader" function that is shown above. But because this is called within a function that is called within a function that is called within a function, how could I get those values in there?

I tried adding a parameter and then bubbling that parameter up. This became problematic from a syntactical standpoint, but I also wasn't happy exposing those values in that way. It seemed very "leaky".

So an easy way to fix this was to store the values in a central location that everyone could access. And that's why I created the configuration file.

Future Updates

Now that I have this working, I'm going to do a bit more experimentation. I would like to have the ability to change the values without having to restart the application. So, I'm going to put back the editable text boxes and see if I can work with a separate object to hold these values.

This would have to be in a separate project to prevent circular dependencies. And I would also want to figure out how to use the same immutable object for the training code and validation code. This will ensure that both are using the same values. If they use different values, then things will fall apart pretty quickly.
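A minimal sketch of what that shared object might look like, assuming an F# record (which is immutable by default). The type name "DataSettings", its fields, and the two helper functions are hypothetical. The point is only that both sides derive their records from the same value, so they can't drift apart.

```fsharp
// Hypothetical settings record; F# records are immutable by default.
type DataSettings = { Offset: int; RecordCount: int }

// True when index i falls inside the block reserved for validation.
let isValidationIndex (s: DataSettings) (i: int) =
    i >= s.Offset && i < s.Offset + s.RecordCount

// Validation code: keep only the reserved block.
let validationSet (s: DataSettings) (records: 'a[]) =
    records
    |> Array.indexed
    |> Array.filter (fun (i, _) -> isValidationIndex s i)
    |> Array.map snd

// Training code: keep everything except the reserved block.
let trainingSet (s: DataSettings) (records: 'a[]) =
    records
    |> Array.indexed
    |> Array.filter (fun (i, _) -> not (isValidationIndex s i))
    |> Array.map snd

let settings = { Offset = 3; RecordCount = 2 }
let records = [| 0 .. 9 |]
printfn "validation: %A" (validationSet settings records)
printfn "training:   %A" (trainingSet settings records)
```

With these sample values, the validation set holds items 3 and 4, and the training set holds the other eight.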

Wrap Up

It's always fun to play and experiment with different things. Now that I've made this application easier to work with (and much less tedious), I'm more likely to explore different algorithms. I've done a little bit of experimentation in the past, but it will be easier to see results now.

As I continue, I'll look at converting more of this code to F# (I still need more practice with that). And we'll see if we can teach the computer to get better at recognizing those hand-written digits.

Happy Coding!

Monday, August 15, 2016

Recognizing Hand-Written Digits: Getting Worse Before Getting Better

I took a stab at improving some machine-learning functions for recognizing hand-written digits. I actually made things less accurate, but it's pointing in a promising direction.

It's been a long time since I first took a look at recognizing hand-written digits using machine learning. Back when I first ran across the problem, I had no idea where to start. So instead of doing the machine learning bits, I did some visualization instead.

Then I got my hands on Mathias Brandewinder's book Machine Learning for .NET Developers, and he showed some basics that I incorporated into my visualization. I still didn't know where to go from there. Recently, I've been doing some more F# exploration, and that inspired some ideas on how I might improve the digit recognizers.

To take a look at the history of the Digit Display & Recognition project, check out the "Machine Learning (sort of)" articles listed here: Jeremy Explores Functional Programming.

Blurring the Results

My first stab at trying to improve the recognizers came from reading Tomas Petricek's book Real-World Functional Programming. In the book, he shows a simple function for "blurring" an array:

There's a lot going on here, and I won't walk through it. But this takes an array of values and then averages each item with its neighbors.

Here's an example that creates an array of random values and then runs it through the "blurArray" function:

If we look at the output, the first array is a set of random numbers. The second output shows the result of running it through our blur function one time.

The last result shows the result of running through the blur function three times. And we can see that the values get "smoother" (or "blurrier") with each step.
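This is not the book's code, but here is a minimal sketch of the same idea. It assumes each element is averaged with its left and right neighbors and that the two end elements are left alone (the book's version may treat the ends differently). Fixed input is used instead of random values so the effect is easy to follow.

```fsharp
// Average each element with its immediate neighbors.
// Assumption: the first and last elements are copied through unchanged.
let blurArray (arr: float[]) =
    Array.init arr.Length (fun i ->
        if i = 0 || i = arr.Length - 1 then arr.[i]
        else (arr.[i - 1] + arr.[i] + arr.[i + 1]) / 3.0)

let data = [| 0.0; 9.0; 0.0; 9.0; 0.0 |]

// One pass: the spikes at 9.0 are spread into their neighbors.
let once = blurArray data

// Three passes: each pass flattens the values a bit more.
let thrice = data |> blurArray |> blurArray |> blurArray

printfn "%A" once
printfn "%A" thrice
```

After one pass the sample becomes 0, 3, 6, 3, 0. Repeating the blur keeps pulling the middle values toward each other.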

Applying Blur to the Digit Recognizer

When I saw this, I thought of the digit recognition problem. Our data was simply an array of numbers. What would happen if I ran a similar "blur" over the digit data?

Note: this code is available in the "BlurClassifier" branch of the "digit-display" project on GitHub: jeremybytes/digit-display "Blur Classifier".

I thought of this because the current algorithms do strict comparisons between 2 images (one pixel at a time). If the images are offset (meaning translated horizontally or vertically by several pixels), the current recognizers would not pick that up. If I added a "blur", then it's possible that it would account for situations like this.

Blurring the Data

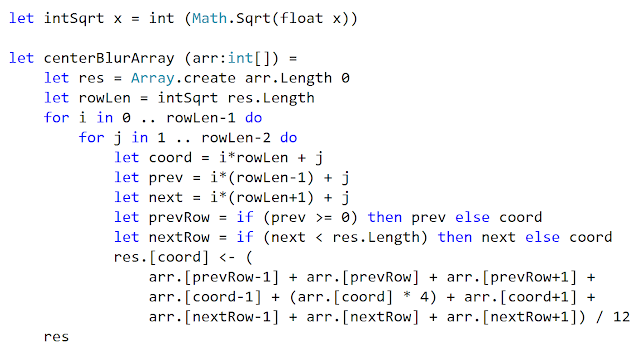

Here's my function to blur the data that we have:

This is a bit more complex than the function we have above. That's because we're really dealing with 2-dimensional data. Each pixel has 8 adjacent pixels (including the row above and below).

I won't go into the details here. I skipped over the edges to make things a bit simpler, and I also weighted the "center" pixel so that it counts 4 times as much as each of the other pixels.
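To make the description concrete, here is a sketch of a 2-dimensional blur along those lines. It is not the code from the "BlurClassifier" branch. It assumes the image is stored as a flat, row-major array of integers, that the width is passed in as a parameter, and that the weighting works out to the center counted 4 times plus the 8 neighbors counted once each (so the total is divided by 12).

```fsharp
// Blur an image stored as a flat, row-major array of pixel values.
// Assumptions: edge pixels are left as-is; the center pixel is counted
// 4 times and each of its 8 neighbors once, so the sum is divided by 12.
let blurPixels (width: int) (pixels: int[]) =
    let height = pixels.Length / width
    Array.init pixels.Length (fun i ->
        let row, col = i / width, i % width
        if row = 0 || col = 0 || row = height - 1 || col = width - 1 then
            pixels.[i]
        else
            let neighbors =
                [ for dr in -1 .. 1 do
                    for dc in -1 .. 1 do
                        if dr <> 0 || dc <> 0 then
                            yield pixels.[(row + dr) * width + (col + dc)] ]
            (4 * pixels.[i] + List.sum neighbors) / 12)

// A tiny 3x3 "image" with a single bright pixel in the middle.
let sample = [| 0; 0; 0
                0; 120; 0
                0; 0; 0 |]
printfn "%A" (blurPixels 3 sample)
```

In the sample, the center pixel drops from 120 to 40 because its 8 neighbors are all 0, and the edge pixels are untouched.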

The New Distance Function

With this in place, I could create a new "distance" function:

This takes 2 pixel arrays, blurs them, and then passes them to our Manhattan Distance function that we already have in place. This means that we can do a direct comparison between our Manhattan Distance recognizer and our new Blur Distance recognizer.
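Here is a sketch of how that composition might look. The blur function is passed in as a parameter so the example stays self-contained, and "simpleBlur" is a 1-dimensional stand-in rather than the real 2-dimensional blur. All of the names are assumptions.

```fsharp
// Sum of absolute per-pixel differences.
let manhattanDistance (a: int[]) (b: int[]) =
    Array.map2 (fun x y -> abs (x - y)) a b |> Array.sum

// Blur both pixel arrays, then reuse the existing distance function.
let blurDistance (blur: int[] -> int[]) (a: int[]) (b: int[]) =
    manhattanDistance (blur a) (blur b)

// Stand-in blur for the demo: average with left/right neighbors, ends untouched.
let simpleBlur (p: int[]) =
    Array.init p.Length (fun i ->
        if i = 0 || i = p.Length - 1 then p.[i]
        else (p.[i - 1] + p.[i] + p.[i + 1]) / 3)

// The same "stroke", shifted one pixel to the right.
let a = [| 0; 0; 90; 0; 0; 0 |]
let b = [| 0; 0; 0; 90; 0; 0 |]

printfn "plain:   %d" (manhattanDistance a b)
printfn "blurred: %d" (blurDistance simpleBlur a b)
```

For this pair, the plain distance is 180 and the blurred distance is 60. That is the effect I was hoping for: a small shift costs less once the values are smeared out.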

The Results

Unfortunately, the results were less than stellar. Here's the output using our Digit Display application:

Note: When comparing the results, the numbers aren't in the same order due to the parallelization in the application. But they should be in the same general area in both sets of data.

There is both good and bad in the results. The good news is that we correctly identified several of the digits that the Manhattan Classifier got wrong.

The bad news is that there are new errors that the original classifier got right. But even with the new errors, it didn't perform any "worse" overall than the original. That tells me that there may be some good things that we can grab from this technique.

But now let's look at another approach.

Adding Some Weight

The other idea that I came up with had to do with how the "best" match was selected. Here's the basic function:

This runs the "distance" function (the "dist" right in the middle) to compare our target item against every item in the training set. In the distance calculation, smaller is better, so this just takes the smallest one that it can find.
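As a sketch of that "take the smallest distance" approach: the "Observation" type, the function names, and the tiny 3-pixel training set below are assumptions for illustration, not the project's actual code or data.

```fsharp
// Assumed shape of a training record: a label plus its pixel values.
type Observation = { Label: string; Pixels: int[] }

// Sum of absolute per-pixel differences.
let manhattanDistance (a: int[]) (b: int[]) =
    Array.map2 (fun x y -> abs (x - y)) a b |> Array.sum

// Compare the target against every training item and keep the closest one.
let classify (dist: int[] -> int[] -> int) (trainingSet: Observation[]) (pixels: int[]) =
    let best = trainingSet |> Array.minBy (fun x -> dist x.Pixels pixels)
    best.Label

// Made-up training data with 3 "pixels" each.
let training =
    [| { Label = "1"; Pixels = [| 0; 10; 0 |] }
       { Label = "7"; Pixels = [| 10; 10; 0 |] } |]

printfn "%s" (classify manhattanDistance training [| 9; 10; 1 |])
```

The target is at distance 10 from the "1" and distance 2 from the "7", so this prints 7.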

But the "best" match isn't always the correct one. So I came up with the idea of looking at the 5 closest matches to come up with a consensus.

Note: this code is available in the "WeightedClassification" branch of the "digit-display" project on GitHub: jeremybytes/digit-display "Weighted Classification".

Here's that function:

This has quite a few steps to it. There's probably a much shorter way of doing this, but this makes it easy to run step-by-step using F# Interactive.

Instead of pulling the smallest value (using "minBy" in the original), it gets the 5 smallest values. It looks something like this (there are some bits left out to make it more readable):

Then it counts up how many there are of each value. In this case, we have three 6s and two 5s. Then it pulls out the one with the most entries in the list. (And 6 is correct in this case.)
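The steps above can be sketched in a more compact form. This is not the step-by-step code from the "WeightedClassification" branch. The "Observation" type, the function name, and the single-pixel training data are made up so that the 5 closest items come out as three 6s and two 5s.

```fsharp
// Assumed shape of a training record: a label plus its pixel values.
type Observation = { Label: string; Pixels: int[] }

// Sum of absolute per-pixel differences.
let manhattanDistance (a: int[]) (b: int[]) =
    Array.map2 (fun x y -> abs (x - y)) a b |> Array.sum

// Take the k closest training items and return the most common label.
let classifyByVote (k: int) (dist: int[] -> int[] -> int) (trainingSet: Observation[]) (pixels: int[]) =
    trainingSet
    |> Array.sortBy (fun x -> dist x.Pixels pixels)   // closest first
    |> Array.truncate k                               // keep the k closest
    |> Array.countBy (fun x -> x.Label)               // (label, count) pairs
    |> Array.maxBy snd                                // most common label
    |> fst

// Made-up single-pixel data; the distance to the target is just the gap in value.
let training =
    [| { Label = "5"; Pixels = [| 101 |] }
       { Label = "6"; Pixels = [| 103 |] }
       { Label = "6"; Pixels = [| 96 |] }
       { Label = "5"; Pixels = [| 106 |] }
       { Label = "6"; Pixels = [| 108 |] }
       { Label = "5"; Pixels = [| 120 |] }
       { Label = "1"; Pixels = [| 150 |] } |]

printfn "%s" (classifyByVote 5 manhattanDistance training [| 100 |])
```

In this sample, the single closest item is a 5 (so the "minBy" approach would answer 5), but the vote across the 5 closest items picks 6.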

To put this into the application, I composed the functions a bit differently to come up with a "weighted" classifier that still used the Manhattan Distance.

The results were not very good:

This actually makes things less accurate overall. But there are some promising items in these results.

First, several of the items that the standard Manhattan Classifier got wrong were correctly identified by the weighted classifier. This did reinforce that the smallest number was not always the correct number.

But there were also a lot of items that this new classifier identified incorrectly. So overall, the performance was worse than the original.

More Refinement

Although this looks like a failure, I think I'm actually headed in the right direction. One thing that I can do to make this more accurate is to add a true "weight" to the calculation. Here's another example from our current approach:

If we look at these values, the distance calculations are fairly close together (within about 1500 of each other). In this case, we can pretty confidently take the one with the "most" values (which is 2 in this case).

But compare that to this:

Here we have a much bigger gap between our best value and our worst value (over 5000). And there is even a big gap between the first value and the next best value (over 4000). Because of this, I really want to weight the first value higher. A simple consensus doesn't work in this case (especially since we have a "tie").

So even though we get worse results with the current implementation, I think this really shows some promise.

If I can add some "weight" to each value (rather than simply counting them), I think it can improve the accuracy by eliminating some of the outliers in the data.
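One possible way to sketch that idea is to let each of the 5 closest items vote with a strength of 1 / (distance + 1), so a very close match counts for much more than a distant one. This inverse-distance weighting is an assumption picked for illustration; it is not something I've settled on or tested against the real data. The types, names, and single-pixel data are made up as well.

```fsharp
// Assumed shape of a training record: a label plus its pixel values.
type Observation = { Label: string; Pixels: int[] }

// Sum of absolute per-pixel differences.
let manhattanDistance (a: int[]) (b: int[]) =
    Array.map2 (fun x y -> abs (x - y)) a b |> Array.sum

// Take the k closest items; each votes with weight 1 / (distance + 1).
let classifyWeighted (k: int) (dist: int[] -> int[] -> int) (trainingSet: Observation[]) (pixels: int[]) =
    trainingSet
    |> Array.map (fun x -> x.Label, float (dist x.Pixels pixels))
    |> Array.sortBy snd
    |> Array.truncate k
    |> Array.groupBy fst
    |> Array.map (fun (label, hits) ->
        let weight = hits |> Array.sumBy (fun (_, d) -> 1.0 / (d + 1.0))
        (label, weight))
    |> Array.maxBy snd
    |> fst

// Made-up data: one very close "4", then a cluster of much more distant items.
// With a target of [| 0 |], each pixel value is also the distance.
let training =
    [| { Label = "4"; Pixels = [| 1000 |] }
       { Label = "9"; Pixels = [| 5100 |] }
       { Label = "9"; Pixels = [| 5300 |] }
       { Label = "7"; Pixels = [| 5600 |] }
       { Label = "4"; Pixels = [| 6000 |] }
       { Label = "1"; Pixels = [| 9000 |] } |]

printfn "%s" (classifyWeighted 5 manhattanDistance training [| 0 |])
```

Here the 5 closest items are tied at two 4s and two 9s by simple count. The weighted vote picks 4, because the match at distance 1000 contributes far more than the two 9s at distances over 5000.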

Wrap Up

I really like having the visualization for what the machine-learning algorithms are doing. This gives me a good idea of where things are going right and where they are going wrong. This is not something that I could get just from looking at "percentage correct" values.

These two approaches to improving the results didn't have the intended effect. But because we could see where they went right and where they went wrong, it's possible to refine these into something better.

I'll be working on adding actual weights to the weighted classifier. I think this holds the most promise right now. And maybe adding a bit of "blur" will help as well. More experimentation is needed. That means more fun for me to explore!

Happy Coding!

Sunday, August 14, 2016

Recognizing Hand-Written Digits: Easier Side-By-Side Comparison

I've been working some more on my code that recognizes hand-written digits. I've actually been trying out a few different approaches to try to improve the machine learning algorithm. But before talking about those, we'll take a look at a few improvements that I've made to the UI.

Note: Articles in this series are collected under the "Machine Learning (sort of)" heading here: Jeremy Explores Functional Programming.

New Features

I added a couple of features to the application. Here's a screenshot:

This code is available on GitHub in the "DetailedComparison" branch of the "digit-display" project: jeremybytes/digit-display "DetailedComparison". (This has been rolled into the "master" branch as well, but the code there may have been updated by the time you read this.)

Record Count

There's now an input field for the number of records to process. Previously, this was a value in the code. This makes it much easier to pick new values based on the screen size.

(Note: I just noticed the typo in the header. D'oh.)

Offset

Previously, we were only able to use records from the beginning of our data set. This offset allows us to start at an arbitrary location in our data file (we'll see why this is important in just a bit).

"Go" Button

Instead of processing things when the application starts up, we now have a button to kick things off. This also means that we can change the parameters and re-run the process without needing to restart the application.

Separate Duration

Each classifier now has its own duration timer. (The previous version just had a single timer for the entire process.)

Error Counts

This is actually the same as our previous version. But I wanted to point out that we can go through and click on the incorrect items. This changes the color of the item and increments our error count. This makes it easy to compare our two different recognizers.

This is still a manual process. We need a human to make a determination on whether the computer was correct. If I can't make out the hand-written digit, then I do not count it as an error.

Different Offsets

I wanted to have the offset available because I knew that if you keep trying to improve a recognizer against a small dataset, eventually you get really good at that small set. But that doesn't necessarily translate into being a good general-purpose recognizer.

I had done some experimentation by changing the offset in code. But having the parameter available makes things much easier. Here's an example of how different data makes a big difference:

Both of our recognizers performed significantly better with this set of data (which starts at item 500) compared to the data above (which starts at 0).

This is why it's important for us to look at different sets of data. When we start changing things, it may get better in some areas but worse in others.

Code Updates

I made a bit of a significant change in the UI: I created a user control to run the recognizer and process the data. This moved a bunch of code out of the main form, and it also reduced quite a bit of the duplication.

The application displays 2 of the user controls side-by-side, but we could display 3 or more (as many as we'd like, really). The user control makes that really easy.

Here's the code behind the "Go" button:

In our UI, we have 2 panels: LeftPanel and RightPanel. We start by clearing these out.

Then we grab the data. Since we have the parameters in the UI, I figured it was best to get the data in this central location (and only do it one time), and then we can pass the data to each of our user controls. The "LoadDataStrings" method grabs the data from the file based on our parameters.

Then we create 2 "RecognizerControl" objects (this is our user control). This has three parameters: (1) the string for the header, (2) the classifier (our recognizer), and (3) the data that will be processed.

We just create a user control for each of our recognizers and then add them to the panels in the UI. I'll be tweaking this part a bit in the future, but this works for us for now.

As a reminder, this code is available on GitHub in the "DetailedComparison" branch of the "digit-display" project: jeremybytes/digit-display "DetailedComparison".

Wrap Up

These changes aren't real exciting. But they do put us in a place that makes it very easy for us to swap out different recognizers. I originally wanted to add some drop-downs so that we could pick different recognizers, but I wanted to prioritize some other things before tweaking the UI further. That may get added in the future.

I've been playing with a couple of ideas to improve the recognizers. These have been inspired by some of the F# reading that I've been doing as well as some ideas of my own. We'll take a look at those next time.

[Update 08/15/2016: Here's the experimentation with those ideas: Getting Worse Before Getting Better.]

Happy Coding!

Friday, July 29, 2016

Discover the Good: Object Oriented & Functional Programming

I've been spending a lot of time this month with functional programming - F# in particular. And I've been exploring functional programming for a while now (I just collected the articles, and there are more than I remembered).

Since I've written quite a bit this month, it has spurred some good conversations. I wanted to expand a bit on a question from Scott Nimrod (@Bizmonger).

As a side note, I got to meet Scott in person at NDC London back in January.

Are You Done with OO?

Here's a question Scott asked me a few days ago (Twitter link):

I've done Object-Oriented Programming for many years, and I've been successful with it. I've done Functional Programming for a much shorter time; and I really like it. But I don't see one paradigm replacing the other in my world.

These are different techniques with different strengths.

Discovering the Good

The thing I've been most impressed about in the Functional Programming community is the emphasis on discovering the good. And I've written about this before: An Outsider's View of the Functional Community.

Rather than getting caught up in "my language is better than your language", the people I know are more interested in what each does well. When we find out what each tool is really good at, we know what to reach for when a particular problem comes up in our environment.

Sporadic Discouragement

Even though there is an overwhelming amount of positive that I see in the functional community, every so often, I'll come across a really depressing article like "Why I'll Never Do OO Programming Again". This is often a rant about failures of projects and problems that arose in their environment.

And this makes me really sad.

Object Oriented Programming has served a good purpose over the years. And it will continue to do that.

I Really Love Object Oriented Programming

Object-Oriented programming techniques have enabled a lot of successes in my career. There are situations where having state and behavior travel together works very well.

I've programmed a lot of line-of-business applications over the years -- and having a mutable object that knows how to validate itself based on a set of rules is really great in that environment. Encapsulation is great for exposing behaviors to the outside world but hiding the implementation. When you combine this with objects that are easily data-bound to a UI, then things get even better.

Object-oriented programming is the right technique for a lot of situations.

It's also the wrong technique for others. It's difficult to multi-thread processes when there is shared, mutable state. But that's okay. When we recognize that, we can look for other techniques.

I Really Love Functional Programming

Functional programming techniques are awesome. I managed to pick up on a lot of functional-ish ideas in my C# programming with delegates, lambdas, and LINQ. I didn't realize it at the time, but these were pushing me toward a better understanding of functional programming.

By having functions with discrete inputs (parameters) and discrete outputs (return) with no shared state, things get *extremely* easy to parallelize. And I've used these techniques in certain areas to get precisely this advantage.

Functional programming is the right technique for a lot of situations.

It's also the wrong technique for others. Data-binding immutable objects doesn't make sense since data-binding is all about notification of state change. But that's okay. When we recognize that, we can look for other techniques.

There's Enough Love to Go Around

I purposely stayed away from referring directly to languages here. In the .NET world, C# is an object-oriented language, but it also has a lot of features that enable functional programming. At the same time, F# is a functional language, but it has features that enable object-oriented programming (particularly since it needs to interop with .NET libraries that are OO-focused).

Keep learning; keep expanding; keep experimenting. What's best for your environment isn't necessarily what's best for my environment. And that's okay.

Happy Coding!

Since I've written quite a bit this month, it has spurred some good conversations. I wanted to expand a bit on a question from Scott Nimrod (@Bizmonger).

As a side note, I got to meet Scott in person at NDC London back in January.

Are You Done with OO?

Here's a question Scott asked me a few days ago (Twitter link):

I've done Object-Oriented Programming for many years, and I've been successful with it. I've done Functional Programming for a much shorter time; and I really like it. But I don't see one paradigm replacing the other in my world.

These are different techniques with different strengths.

Discovering the Good

The thing I've been most impressed about in the Functional Programming community is the emphasis on discovering the good. And I've written about this before: An Outsider's View of the Functional Community.

Rather than getting caught up in "my language is better than your language", the people I know are more interested in what each does well. When we find out what each tool is really good at, we know what to reach for when a particular problem comes up in our environment.

Sporadic Discouragement

Even though there is an overwhelming amount of positive that I see in the functional community, every so often, I'll come across a really depressing article like "Why I'll Never Do OO Programming Again". This is often a rant about failures of projects and problems that arose in their environment.

And this makes me really sad.

Object Oriented Programming has served a good purpose over the years. And it will continue to do that.

I Really Love Object Oriented Programming

Object-Oriented programming techniques have enabled a lot of successes in my career. There are situations where having state and behavior travel together works very well.

I've programmed a lot of line-of-business applications over the years -- and having a mutable object that knows how to validate itself based on a set of rules is really great in that environment. Encapsulation is great for exposing behaviors to the outside world but hiding the implementation. When you combine this with objects that are easily data-bound to a UI, then things get even better.

Object-oriented programming is the right technique for a lot of situations.

It's also the wrong technique for others. It's difficult to multi-thread processes when there is shared, mutable state. But that's okay. When we recognize that, we can look for other techniques.

I Really Love Functional Programming

Functional programming techniques are awesome. I managed to pick up on a lot of functional-ish ideas in my C# programming with delegates, lambdas, and LINQ. I didn't realize it at the time, but these were pushing me toward a better understanding of functional programming.

By having functions with discrete inputs (parameters) and discrete outputs (return) with no shared state, things get *extremely* easy to parallelize. And I've used these techniques in certain areas to get precisely this advantage.

Functional programming is the right technique for a lot of situations.

It's also the wrong technique for others. Data-binding immutable objects doesn't make sense since data-binding is all about notification of state change. But that's okay. When we recognize that, we can look for other techniques.

There's Enough Love to Go Around

I purposely stayed away from referring directly to languages here. In the .NET world, C# is an object-oriented language, but it also has a lot of features that enable functional programming. At the same time, F# is a functional language, but it has features that enable object-oriented programming (particularly since it needs to interop with .NET libraries that are OO-focused).

Object-Oriented Programming and Functional Programming are both awesome. They just happen to be awesome at different things. We should really strive to use the right tool for the job. This means that we keep learning new things to add to our toolbox. I don't throw out my angle grinder because I got a new cordless drill. And I don't throw out perfectly working programming techniques just because I pick up a new one.

Keep learning; keep expanding; keep experimenting. What's best for your environment isn't necessarily what's best for my environment. And that's okay.

Happy Coding!

Tuesday, July 26, 2016

Sequences vs. Lists in F# (with Euler Problems #7, #8, #9, and #10)

In going through the Euler Problems in F#, I've found myself using lists and sequences a lot. That's probably because I'm comfortable with map, filter, collect, sum, and similar functions, which are so much like the functions I love in LINQ in C#.

This means that my solutions have tended to be a bit of "brute force". But I have done some refinement later; this will continue.

I ended up working on the next set of Euler Problems at the same time -- bouncing between them and sharing ideas where I could. I ended up with solutions that were reasonably performant. But I picked up something else from this process:

[Update: Collected articles for the first 10 Euler Problems in F# are available here: Jeremy Explores Functional Programming.]

Euler Problem #7

Here's Euler Problem #7:

And here's running the function with the target value:

This is a bit of a brute force solution. The "isPrime" function determines whether there are any factors of a number. This is what we used when looking at Euler Problem #3 (the "factor" function), but then I added the "Seq.isEmpty" check on to the end. If there are no factors, then the number is prime.

Why Sequence is Important Here

The reason that using a sequence is important here is that sequences are lazy-evaluated -- similar to how IEnumerable in C# is only evaluated once you start enumerating through it.

So when we use "isEmpty", this pulls the first item in the sequence (and only the first item). If it finds an item, then it short-circuits the evaluation of the rest of the sequence. So, we don't actually end up calculating all the factors for a number, we only calculate the *first* factor. If it exists, then the number is not prime, and we return false. If there are no factors, then the number is prime, and we return true.
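As a rough illustration of that short-circuiting, here is a minimal sketch. It is not necessarily the code used for this solution: the "factor" helper and its search bound (the square root of the number) are assumptions made for the example.

```fsharp
// Hypothetical helper: lazily yields the factors of n between 2 and sqrt(n).
// The search bound is an assumption; nothing is computed until the sequence is enumerated.
let factor n =
    seq { 2 .. int (sqrt (float n)) }
    |> Seq.filter (fun x -> n % x = 0)

// Seq.isEmpty asks for at most one element, so the search stops at the first factor found.
let isPrime n =
    n > 1 && Seq.isEmpty (factor n)

printfn "%b" (isPrime 97)   // true
printfn "%b" (isPrime 91)   // false (7 * 13)
```

With a list instead of a sequence, every candidate would be tested before "isEmpty" got a chance to look at the result.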

Why the "match"?

In the "euler7" function, we use pattern matching on our number. The reason is that I wanted to do a bit of optimization.

The second pattern uses another sequence. It goes from 3 to 1 million, but I specified "[3..2..1000000]". The middle term means "step 2", so we're only taking every other number in this sequence. This skips all of the even numbers (which we know are not prime) and cuts our processing time in half.

But we have one exception: "2" (an even number) is prime. So, I manually add a pattern for that in case someone asks for the first prime number.

Why Sequence is Important Here (Again)

You'll notice that we're using a sequence in the "euler7" function as well. This is because we ask for a particular "item". This will evaluate the sequence up to that item (and no further).

The "n-2" looks odd. That's because "item" is zero-based. So to get the 10001st prime, we need to get the item at index 10000. But we also skipped "2" in this sequence, so we need to subtract one more number from our index to get an accurate match.
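Putting the pattern match, the stepped range, and the "n-2" index together, a sketch of the function might look like the following. This is a reconstruction from the description above rather than the original listing; the "isPrime" shown here (with its square-root bound) is an assumption.

```fsharp
// Assumed primality test: true when no divisor exists between 2 and sqrt(n).
let isPrime n =
    seq { 2 .. int (sqrt (float n)) }
    |> Seq.exists (fun x -> n % x = 0)
    |> not

let euler7 n =
    match n with
    | 1 -> 2                          // the only even prime, handled by hand
    | _ ->
        seq { 3 .. 2 .. 1000000 }     // odd numbers only: 3, 5, 7, ...
        |> Seq.filter isPrime
        |> Seq.item (n - 2)           // zero-based index, and "2" is not in this sequence

printfn "%d" (euler7 6)               // 13
```

Because the sequence is lazy, "Seq.item" only forces as many candidates as it needs to reach the requested prime.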

Performance

It takes 18 seconds to evaluate the 10,001st prime number. That's not too bad for a brute force attack. There's sure to be a better mathematical approach to this problem, but I haven't researched that yet.

Euler Problem #8

Let's move on to Euler Problem #8:

My solution starts out by treating this as a giant string:

When we run this to find the 13 numbers that produce the largest product, we get this result:

Just a warning when you're looking for Euler solutions online. For this particular problem, there are different target values. This one uses 13, but there are also solutions that are looking for 5 adjacent numbers or another variation.

We don't need to treat the original number as a number, we can treat it as a string. What I decided to do was create a 13-character "window" on the string that I could slide through the entire 1000 characters.

So the "windowProduct" function takes a starting index and the size of the window (13 in our case), takes those characters and turns them into integers, and then multiplies them together.

A couple notes: I extracted out a "charToInt64" function to make converting a character to a number a bit more obvious. And I'm using 64-bit values here because our product will overflow a 32-bit integer.

For the "euler8" function, I create a sequence that includes all of the possible windows in our 1000-digit number. It finds the product of all those numbers, and picks out the biggest one.
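Here is a minimal sketch of the sliding-window idea. It is a reconstruction, not the original listing: the digit string is passed in as a parameter (an assumption), and a short made-up string stands in for the real 1000-digit number.

```fsharp
// Converts a digit character such as '7' to the 64-bit value 7L.
let charToInt64 (c: char) = int64 (int c - int '0')

// Multiplies the digits in the window that starts at "start" and is "size" characters wide.
let windowProduct (digits: string) start size =
    digits
    |> Seq.skip start
    |> Seq.take size
    |> Seq.map charToInt64
    |> Seq.fold (fun acc d -> acc * d) 1L

// Evaluates every possible window and keeps the largest product.
let euler8 (digits: string) size =
    [ 0 .. digits.Length - size ]
    |> List.map (fun start -> windowProduct digits start size)
    |> List.max

// Hypothetical 10-digit input with a window of 3: the best window is 6 * 7 * 5.
printfn "%d" (euler8 "3675356291" 3)   // 210
```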

Why Sequence is Important Here

The sequence inside "windowProduct" is important because we're dealing with a string here. In C#, a string implements the IEnumerable<char> interface; in F#, string is a "seq<char>". Since we already have a sequence, we'll just keep working with it.

Why List is Important Here

Inside the "euler8" function, we use a list rather than a sequence. The reason is that we need all of the possible values in order to find the "max" value. This means that all of the items need to be evaluated. Because of this (and the fixed size of the collection), a list is a bit more efficient here.

Performance is good (less than a 10th of a second), but in my (extremely unscientific) tests, using a sequence in this function took about twice as long. With these particular values, it's not a significant difference. But it's something to keep in mind.

Euler Problem #9

So let's move on to Euler Problem #9:

This does give the correct answer:

(And in case you're wondering, the three values are 200, 375, and 425.)

The "squareList" is a list that contains the squares for the numbers from 1 to half of our target value (I used half because it sounded reasonable since we were adding and squaring numbers in various ways -- I can't say that I have a mathematical proof for why this is a good value).

The "collect" function creates a Cartesian product of 2 sets of these lists of squares (similar to what we did for Euler Problem #4). This creates all of the permutations of values -- adding each square together and recording the value. The result is a list of tuples with the first square, the second square, and the sum of the two squares.

The "filter" compares the sum of the two squares against our "squareList" (since this is a list of known squares). It hangs on to only those values where the sum is also a square.

The "map" takes the square root of all of our values (yes, I know this isn't very efficient, but when I was trying to come up with a data structure that held both the squares and the original values, I ended up with a bit of a mess).

The "find" function gets the values where the original (non-squared) values add up to our target (1000).

Finally, we have the "prodTuple" function which will multiply the 3 values in our tuple together to get us the ultimate result.
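The steps above can be sketched roughly as follows. This is a reconstruction from the description, not the original listing; taking the target as a parameter and using "List.contains" for the lookup are assumptions.

```fsharp
// Multiplies the three values of a tuple together.
let prodTuple (a, b, c) = a * b * c

let euler9 target =
    // Squares of 1 .. target/2
    let squareList = [ 1 .. target / 2 ] |> List.map (fun x -> x * x)
    let root x = int (sqrt (float x))
    squareList
    // Cartesian product: (first square, second square, sum of the two)
    |> List.collect (fun a2 -> squareList |> List.map (fun b2 -> (a2, b2, a2 + b2)))
    // Keep only the sums that are themselves known squares
    |> List.filter (fun (_, _, c2) -> List.contains c2 squareList)
    // Back to the original (non-squared) values
    |> List.map (fun (a2, b2, c2) -> (root a2, root b2, root c2))
    // The triple whose values add up to the target
    |> List.find (fun (a, b, c) -> a + b + c = target)
    |> prodTuple

printfn "%d" (euler9 12)   // 60, from the triple 3, 4, 5
```

Note that "List.find" throws if no triple matches, so this sketch assumes the target has a solution.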

Why Lists are Important Here

Like with our previous example, we have a fixed number of values (the Cartesian product of all of the squares from 1 to half of our target). Since this is a fixed size, and we're evaluating everything, a sequence wouldn't buy us any short-circuiting advantages.

Performance

The performance is okay. It takes a little over a second to evaluate this. Again, there is probably a better mathematical approach to this. But computers are very good at brute-forcing things (that's why they're so good at playing chess).

Euler Problem #10

Finally today, we have Euler Problem #10:

And when we run this with our target value, we get the correct answer:

This isn't my first try at this. My first try was a brute force approach. That didn't come out so well:

It worked, but it took almost an hour and a half to complete. This is really not acceptable.

Sieve of Eratosthenes

To get acceptable performance, I abandoned the brute-force approach and used something known as the Sieve of Eratosthenes. This is really good at finding the prime numbers below a certain value.

The short version is that we can eliminate all values that are multiples of a prime. Let's take the values from 2 to 20:

[2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20]

First, we can eliminate everything evenly divisible by 2 (our first prime), other than 2 itself:

[2, 3, 5, 7, 9, 11, 13, 15, 17, 19]

Then we can eliminate everything evenly divisible by 3 (our second prime), other than 3 itself:

[2, 3, 5, 7, 11, 13, 17, 19]

We only have to go up to the square root of our target value (that's the number I mistakenly applied to a different problem previously). Since the square root of 20 is 4.x, we can stop at 4.

What's left is the set of primes less than 20:

[2, 3, 5, 7, 11, 13, 17, 19]
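The walk-through above can be expressed as a small recursive filter. This is a simplified sketch of the elimination idea only, not the implementation discussed next; the name "sieve" and its shape are assumptions for illustration. It also filters on composite values such as 4, which is redundant but harmless.

```fsharp
// Removes the multiples of every p from 2 up to sqrt(limit), keeping p itself.
let sieve limit =
    let maxFactor = int (sqrt (float limit))
    let rec loop candidates p =
        if p > maxFactor then
            candidates
        else
            let remaining =
                candidates |> List.filter (fun x -> x = p || x % p <> 0)
            loop remaining (p + 1)
    loop [ 2 .. limit ] 2

printfn "%A" (sieve 20)   // [2; 3; 5; 7; 11; 13; 17; 19]
```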

A Clumsy Approach

I'll admit that my implementation of the Sieve of Eratosthenes is a bit clumsy. But it works. You'll see that we have the same "isPrime" function that we used in Euler Problem #7 (although it's just on a single line here).

Then I calculate a set of "sievePrimes". This uses brute-force to get the prime numbers up to 1414 (which happens to be the square root of our target number).